Team9 is writing a series of articles about deepfakes and related detection technologies. Today, let's go through various cases of deepfakes. Media synthesized by Generative Adversarial Networks (GANs) is like two sides of a coin, with both positive and negative aspects. GANs can create images of living and non-living things, such as human faces, living rooms, buses, cars, and parking lots, and the scope of GANs and related technologies has expanded beyond still images to video and audio. While these technologies offer nearly limitless possibilities for digital content production and new business, they also have a negative side, because they can be used for crime.

Positive cases of deepfake technology

The following cases show deepfake technology being used to positive effect. The most representative example is producing high-quality media with new digital content technologies.

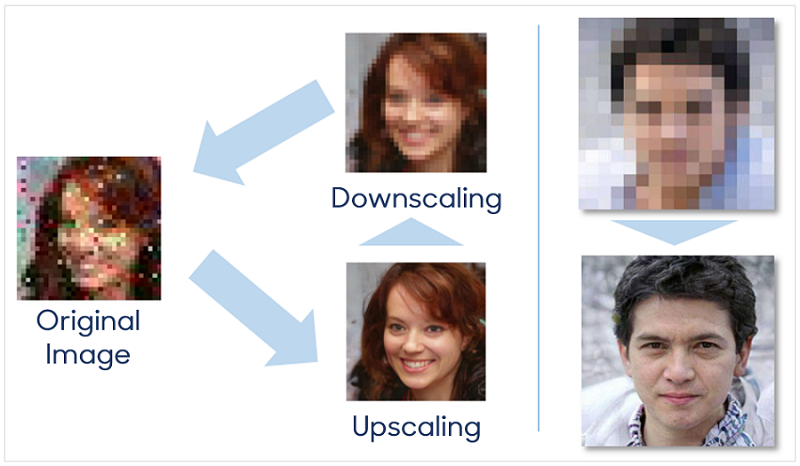

In a 2020 paper, researchers at Duke University in the US introduced a method that generates an optimal high-resolution image from a low-quality one by repeatedly upscaling and downscaling* images and comparing the results with the original.

* Upscaling and downscaling mean increasing or decreasing the original resolution (pixel dimensions) of an image or video.
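The comparison step described above can be illustrated with a downscaling-consistency check: a candidate high-resolution image is plausible only if shrinking it back down reproduces the low-quality original. The following is a minimal Python sketch, not the Duke paper's code. It assumes grayscale images stored as lists of rows, box-filter downscaling, and nearest-neighbor upscaling; the function names (`downscale`, `upscale`, `consistency_error`, `pick_best`) are hypothetical, and a caller-supplied candidate list stands in for the generative model that the actual method searches.

```python
# A sketch of a downscaling-consistency check, not the Duke paper's code.
# Images are grayscale, stored as lists of rows; all names are hypothetical.


def downscale(img, factor):
    """Box-filter downscale: average each factor x factor block of pixels."""
    height, width = len(img), len(img[0])
    out = []
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [img[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def upscale(img, factor):
    """Nearest-neighbor upscale: repeat each pixel in a factor x factor block."""
    out = []
    for row in img:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def consistency_error(candidate_hr, original_lr, factor):
    """Mean squared error between the downscaled candidate and the original."""
    down = downscale(candidate_hr, factor)
    diffs = [(a - b) ** 2
             for down_row, lr_row in zip(down, original_lr)
             for a, b in zip(down_row, lr_row)]
    return sum(diffs) / len(diffs)


def pick_best(candidates, original_lr, factor):
    """Choose the candidate whose downscaled version best matches the original.

    In the real method the candidates come from a generative model; here they
    are simply a list supplied by the caller (an assumption for illustration).
    """
    return min(candidates,
               key=lambda c: consistency_error(c, original_lr, factor))


if __name__ == "__main__":
    lr = [[10, 200], [50, 90]]            # toy 2 x 2 "low-quality" image
    naive = upscale(lr, 2)                # 4 x 4 blocky candidate
    sharp = [[0, 20, 200, 200],           # same block averages, more detail
             [20, 0, 200, 200],
             [50, 50, 90, 90],
             [50, 50, 90, 90]]
    print(consistency_error(naive, lr, 2))  # 0.0
    print(consistency_error(sharp, lr, 2))  # 0.0
```

Both the blocky and the sharper candidate score an error of 0.0, because their 2 x 2 blocks have the same averages. Many different high-resolution images shrink to the same low-resolution one, so the consistency check alone cannot choose a realistic result; in approaches like Duke's, the generative model is what keeps the candidates looking like real photos.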

Similar technologies have been applied to ultra-high-definition TVs. Korea's leading global TV manufacturers use AI trained on video and sound to optimize picture quality, and they deliver a richer viewing experience by upscaling content to ultra-high resolution. As the photo shows, a full HD image (1,920 x 1,080 pixels) was upscaled to 4K (approx. 4,000 pixels wide) and then to 8K (approx. 8,000 pixels wide).
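The resolution figures above can be checked with simple arithmetic. This sketch assumes the consumer UHD formats 3,840 x 2,160 (4K) and 7,680 x 4,320 (8K), which match the approximate widths in the text; the dictionary and function names are hypothetical.

```python
# Consumer UHD resolutions (width, height) in pixels; assumed to be the
# formats meant by "4K" and "8K" in the text.
RESOLUTIONS = {
    "Full HD": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def scale_from_full_hd(name):
    """Return (linear scale factor, pixel-count ratio) relative to full HD."""
    width, height = RESOLUTIONS[name]
    base_width, base_height = RESOLUTIONS["Full HD"]
    linear = width / base_width
    pixel_ratio = (width * height) / (base_width * base_height)
    return linear, pixel_ratio


def generated_fraction(name):
    """Fraction of output pixels an upscaler must synthesize from full HD."""
    _, pixel_ratio = scale_from_full_hd(name)
    return 1 - 1 / pixel_ratio


for name in ("4K UHD", "8K UHD"):
    linear, ratio = scale_from_full_hd(name)
    print(f"{name}: {linear}x per side, {ratio}x pixels, "
          f"{generated_fraction(name):.2%} synthesized")
```

Doubling the width and height quadruples the pixel count, so going from full HD to 4K means the upscaler must fill in 3 of every 4 output pixels (0.75), and going to 8K means 15 of every 16 (0.9375). This is where learned upscaling, rather than simple pixel repetition, has the most to contribute.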

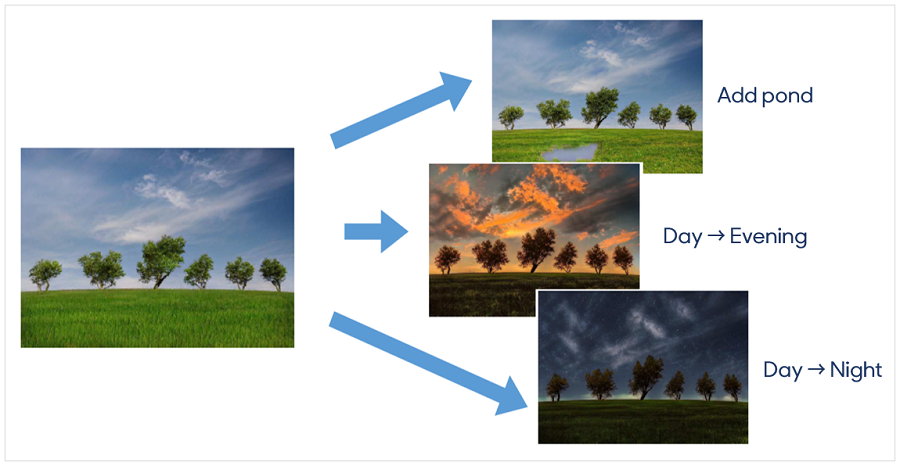

A technology jointly announced by Adobe (maker of Photoshop) and the University of California, Berkeley in the US can insert an image of a pond into another image, or even change a scene's background to evening or night. These techniques are widely used in photography, film, and art. However, the line between this new technology and conventional computer graphics edited by hand is becoming blurred.

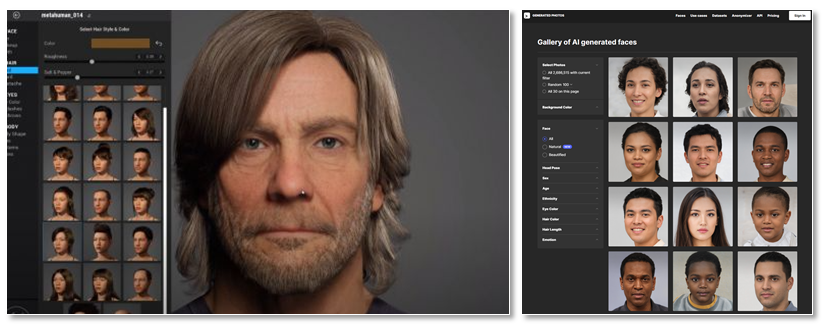

Turning to virtual humans: Gartner, a major IT research and consulting firm, has described virtual humans and related technologies as the "Digital Twin of the Person" (2020) and "Digital Humans" (2021), predicting that these technologies would mature within five to ten years. Virtual humans appeared early in technologically advanced countries; in Korea, the cyber singer Adam, a virtual human, debuted in 1998.

A GAN can create virtual humans of any race, gender, age, and facial expression. Because these faces do not belong to real people, they can be used indefinitely without portrait-rights issues.

Source : Epic Games Meta Human Center (left), Generated Photos (right)

Next are virtual influencers: virtual humans with influence on social media. In Korea, there is the virtual influencer Rozy, a 22-year-old female character. Created using AI and CG, she works as a model for a Korean bank. In tune with Millennial and Gen Z culture, Rozy posts photos of herself traveling around the world on her Instagram account and communicates with her fans there. Thanks to AI audio synthesis, Rozy even appeared on a radio program, a first of its kind. The company that created Rozy reportedly earns approximately USD 1.53 million in revenue, along with an even larger advertising effect. Above all, Rozy poses no risk of damaging the company's image: as a virtual human, she cannot become involved in private-life issues, scandals, or school violence.

Virtual humans now appear in many areas of life, such as AI news anchors modeled on real announcers and AI versions of presidential candidates used in election campaigns. As mentioned above, GANs can create images of living and non-living things, such as rooms, buses, cars, and parking lots, not to mention human faces. Visit the "This X Does Not Exist" (thisxdoesnotexist) site to see what GANs can create.

Negative cases of deepfake technology

Next, let's look at cases where deepfakes and related technologies are used for harm. As the technology rapidly advances, crimes involving deepfakes have become a social issue. As fake images, videos, and voices created through deep learning grow more sophisticated, deepfake crimes such as fake news, financial fraud, and illegal pornography are occurring worldwide.

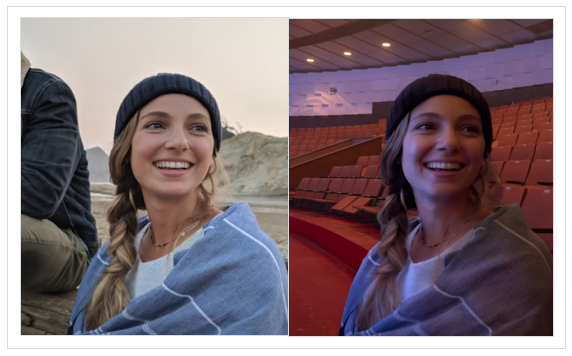

Example of person/environment replacement through deepfake technology (Source: Google Research 2021)

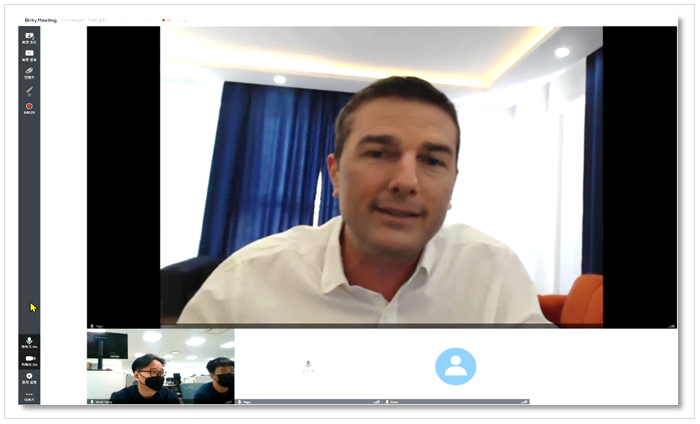

Recently in the US, a hacker used a deepfake face in an online interview and got the job. He then gained access to the company's internal systems and attempted to steal its core data. The Federal Bureau of Investigation's (FBI) Internet Crime Complaint Center (IC3) posted an official notice warning US companies to be cautious during job interviews. Remote work is especially common in large countries like the US, so much of the recruiting process takes place online. As a result, applicants can readily use deepfake tools such as DeepFaceLive to create deepfake media for criminal purposes.

Team9's partner applying a celebrity deepfake in real time during a Brity meeting

Sources: 1. Deepfakes and Stolen PII Utilized to Apply for Remote Work Positions (FBI IC3, https://www.ic3.gov/Media/Y2022/PSA220628)

2. FBI warning: Crooks are using deepfake videos in interviews for remote gigs (The Register, https://www.theregister.com/2022/06/29/fbi_deepfake_job_applicant_warning)

In the US, a deepfake was also used by an adult to bully teenagers for the first time. A woman whose daughter was a cheerleader created deepfake media targeting her daughter's rivals, and she was arrested for sending the fake files to the team's coaches and to friends.

Deepfakes have also been used maliciously in many other ways, including financial fraud, digital sexual violence, opinion manipulation, and threats to national security.

AI and deepfake technology have now advanced to the point where even skilled experts struggle to identify fakes. The general public therefore also needs to be able to recognize fake news across different platforms. Team9 will continue researching deepfake detection technologies to help prevent crime-related damage. Finally, here are some useful tips on how to respond to potential deepfake media.

□ How to Respond to Deepfake Media

Inspired by Microsoft and the University of Washington. Used with permission (2021).

1. If you encounter media that you suspect is a deepfake, consider its potential impact on society.

2. There is no general rule for identifying deepfakes, but when you encounter a suspicious photo or video, notice how you react to it emotionally.

3. If a photo or video makes you feel a strong emotion, stop what you are doing and ask yourself why you feel that way.

4. Have you ever shared a funny or heartwarming photo on social media without a second thought? Next time, pause for a moment and search for the photo or video on the Internet.

5. If you pay attention, you may soon realize that what you saw is not real.

Read previous articles on deepfakes:

+ Which One Is Real? Generating and Detecting Deepfakes

+ What Are Cheapfakes (Shallowfakes)?

+ What Is Proactive Media Forgery Prevention?

※ This article is based on objective research findings and facts available at the time of writing, and may not represent the views of the company.
