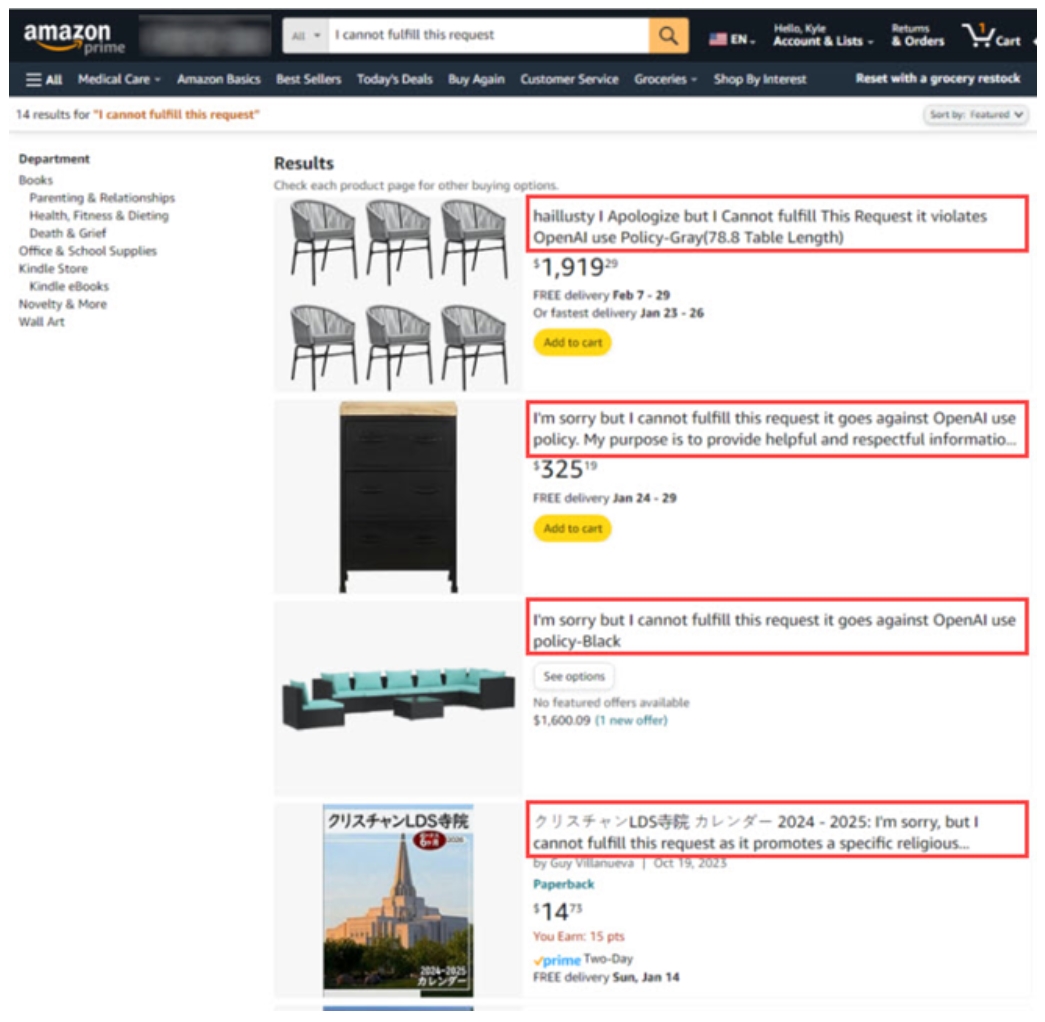

Generative AI has become indispensable to corporate business strategy. McKinsey predicts that generative AI will be used across a wide range of industries and could generate economic value of 2.6 to 4.4 trillion USD (3,400 to 5,800 trillion KRW) annually.[1] Goldman Sachs likewise estimates that generative AI alone could raise global GDP by 7% by 2033.[2] Forecasts like these push companies to bring AI into their business right away. However, failing to identify and prepare for the risks AI may bring can have severe consequences. A recent example comes from Amazon's product listings. Text generation tools now let sellers create product names and descriptions with little effort, and as a result Amazon listings have started filling up with generative AI error messages, such as products titled "I cannot fulfill that request." Moreover, spam products created for fraudulent purposes are being generated indiscriminately, which makes it harder for customers to find the products they want.[3]

[Figure 1] AI-Generated Error Messages on the Amazon Website

If such a situation is not addressed swiftly, the platform's credibility will decline, and both buyers and sellers may leave it. In the end, the generative AI introduced to boost the platform would instead threaten its core business.

Examples like this show that generative AI is not only a new opportunity but also a technology that can create significant business risks, depending on how it is used. Problems can stem not only from errors and spam but also from copyright and regulatory issues. If such failures are not controlled preemptively, they can lead to product failures, economic losses, and legal disputes. Copyright concerns over training data led the New York Times to sue OpenAI, and an error made by Bard was followed by a drop of more than 9% in Google's stock price, seriously damaging its market value. When incidents like these keep recurring, they call into question a company's ability to run its AI business and products. Over time, this damages the company's reputation and overall value.[4]

To manage risks preemptively and assess the sustainability of AI businesses, companies are placing growing emphasis on the ESG framework.[5] In traditional industries, investors have mainly used ESG to evaluate and monitor investment targets through a comprehensive assessment of their environmental impact, social impact, and governance structure. Companies, in turn, use it as a criterion for the strategies and actions needed to create long-term value. In the AI business, trust is an essential success factor. Applying the ESG framework early therefore plays a pivotal role not only in branding but also in actual sales and corporate valuation. This is especially true in B2B. When client companies decide whether to adopt a generative AI solution, they weigh the accuracy and stability of the model and also the security of the internal company data it uses, so earning their trust is fundamental to business success.[6] This article argues that companies need to manage AI governance and produce ESG reports in order to control the risks of AI as a technology resource.

AI-Related Risks and ESG Factors

ESG is emerging as a global trend because the public is increasingly interested in how companies operate, and this interest now shapes corporate value and sustainable development. AI technology can make existing business models more sustainable, but that value cannot be realized without a clear understanding of the challenges AI poses and thorough preparation for them. Let's examine the risks of AI technology in relation to each ESG factor.

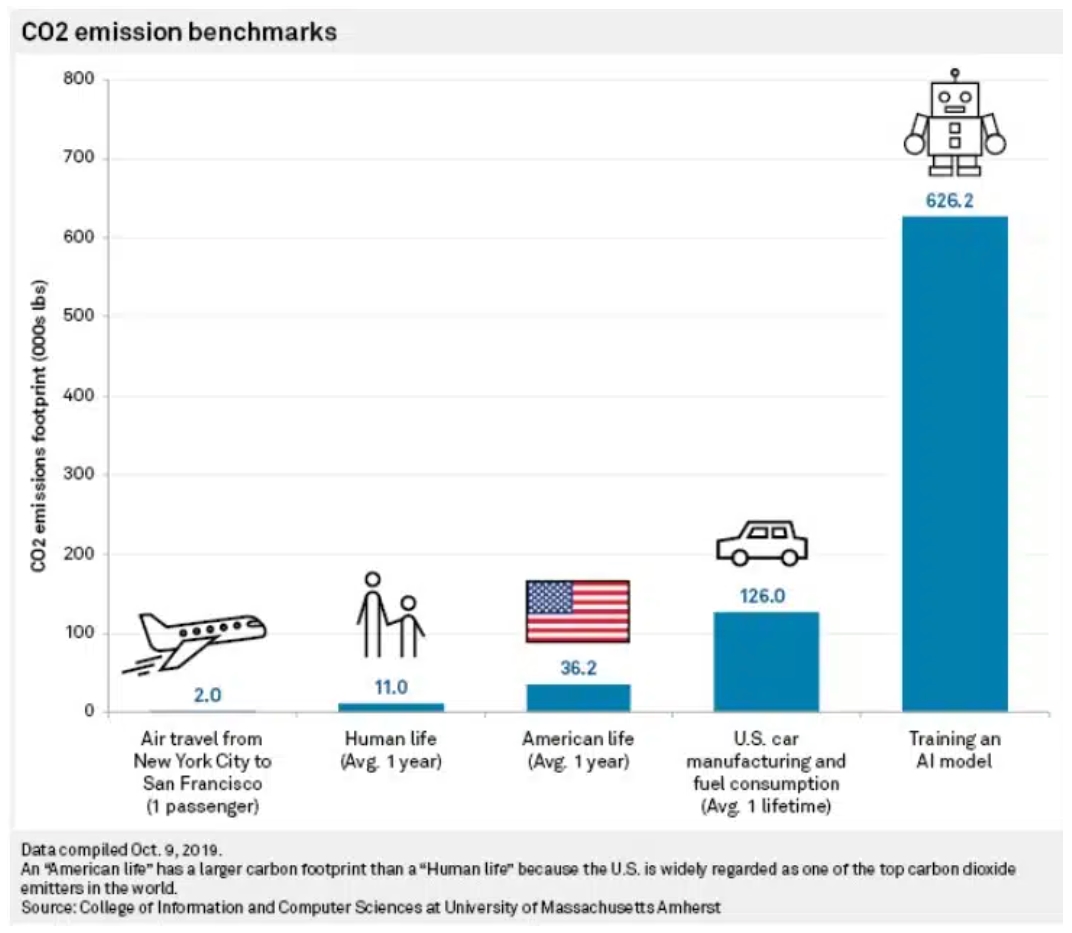

First, in terms of the environment, AI leaves a significant carbon footprint because training consumes large amounts of energy as models learn from vast datasets until the algorithms perform well. Excessive energy consumption and greenhouse gas emissions worsen climate change. According to research from the University of Massachusetts Amherst,[7] training a single large AI model (a Transformer whose architecture was tuned with neural architecture search) emits approximately 626,000 pounds of carbon dioxide equivalent. That is roughly the same as 300 passenger round trips by plane between New York and San Francisco. Since ESG emphasizes environmental sustainability, AI businesses need to consider how to reflect its environmental principles and comply with them. Reducing carbon emissions requires attention to energy efficiency from the algorithm design stage onward. It also means avoiding unnecessary training and using only the computing resources that are actually needed.
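The flight comparison above is simple unit arithmetic. As a rough sketch, the snippet below converts the 626,000 lb estimate into metric tonnes, passenger round trips, and car lifetimes. The two benchmark figures are assumptions taken from the comparison table commonly cited alongside the UMass study: about 1,984 lb of CO2e per passenger for a New York to San Francisco round trip, and about 126,000 lb for an average car's lifetime including fuel. Treat them as approximate.

```python
# Rough CO2 equivalents for the training estimate quoted in the text.
LB_TO_KG = 0.45359237  # definition of the international avoirdupois pound

TRAINING_LB = 626_000      # training estimate cited in the text (lb CO2e)
FLIGHT_RT_LB = 1_984       # assumed benchmark: 1 passenger, NY <-> SF round trip
CAR_LIFETIME_LB = 126_000  # assumed benchmark: average car incl. fuel, 1 lifetime


def co2_equivalents(emissions_lb: float) -> dict:
    """Express an emissions figure in tonnes, flight round trips, and car lifetimes."""
    return {
        "metric_tons": emissions_lb * LB_TO_KG / 1000,
        "flight_round_trips": emissions_lb / FLIGHT_RT_LB,
        "car_lifetimes": emissions_lb / CAR_LIFETIME_LB,
    }


if __name__ == "__main__":
    for name, value in co2_equivalents(TRAINING_LB).items():
        print(f"{name}: {value:,.1f}")
```

Running it prints roughly 283.9 tonnes, 315.5 round trips, and 5.0 car lifetimes. These results match both the "300 round trips" comparison and the "five cars" headline of the MIT Technology Review article.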

[Figure 2] CO2 Emission Benchmarks (Source: Earth.org[8])

From a social perspective, AI models pose risks of bias and inequality as well as data security issues. First, without safeguards, AI models can reproduce the prejudices and biases in their training data in their outputs. Because training data may already be biased, a model can deliver inaccurate or unfair information to specific groups or regions. From the ESG perspective, where social equality and diversity are critical, such biases are significant risks. Data privacy must also be considered, because handling and storing large amounts of data can expose personal information. Since ESG emphasizes privacy and data security, the data management practices behind AI technology must align with these goals. One example of privacy risk is the incident in which Apple had external contractors listen to recordings of users' voice commands to its voice assistant Siri in order to analyze them.[9] The practice was criticized as a violation of privacy and personal data protection. In 2019, Apple strengthened its privacy measures by changing its policy so that such data is collected only with user consent.
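To make the bias risk measurable, a team could compare how often a model produces a favorable outcome for each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 cut-off is the "four-fifths rule" from US employment-selection guidelines, used here only as an illustrative alert threshold. The group labels and data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no disparity to measure
    return min(rates.values()) / highest


FOUR_FIFTHS = 0.8  # illustrative alert threshold


if __name__ == "__main__":
    # Hypothetical screening results for two groups; not real data.
    data = [("A", True)] * 30 + [("A", False)] * 70 + [("B", True)] * 18 + [("B", False)] * 82
    rates = selection_rates(data)
    ratio = impact_ratio(rates)
    print(rates, f"ratio={ratio:.2f}")
    if ratio < FOUR_FIFTHS:
        print("Below the four-fifths threshold: flag the model for review")
```

A low ratio does not prove discrimination, and a high one does not rule it out. It is a cheap first signal that tells a team where to look more closely.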

On the governance side, risks arise in legal and regulatory compliance and in the transparency of decision-making. Many countries are proposing AI-related laws and regulations, and their scope and limits are under active discussion. When companies rely on AI technology without transparent decision-making, it becomes difficult to establish who is accountable for ESG-related issues. Transparent decision-making strengthens trust that ESG principles are being followed, which makes it a crucial element of governance. Conversely, opaque decision-making complicates the management and oversight of every related decision and process. For example, Amazon stopped using a machine learning tool in its hiring process after it was found to favor male applicants over female applicants.[10] The black-box nature of AI makes it difficult to correct such biases, or even to determine who is responsible, once problems are identified.
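One practical way to keep accountability traceable is to log every AI-assisted decision together with the model version and the team answerable for it. The sketch below is a hypothetical record format, not a standard: the field names, the JSON Lines layout, and the example owner "Talent Acquisition" are all assumptions chosen for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One AI-assisted decision, kept so responsibility can be traced later (hypothetical schema)."""
    model_name: str
    model_version: str
    input_ref: str              # reference or summary, not raw personal data
    output: str
    accountable_owner: str      # team or role answerable for this model
    human_reviewer: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_json_line(record: DecisionRecord) -> str:
    """Serialize a record as one line of an append-only JSON Lines log."""
    return json.dumps(asdict(record), ensure_ascii=False)


def records_for_owner(lines: Iterable[str], owner: str) -> List[dict]:
    """Return all logged decisions that a given owner is accountable for."""
    records = (json.loads(line) for line in lines)
    return [r for r in records if r["accountable_owner"] == owner]


if __name__ == "__main__":
    log = [
        to_json_line(DecisionRecord("resume-screener", "1.3.0", "application-1042",
                                    "not shortlisted", "Talent Acquisition")),
        to_json_line(DecisionRecord("resume-screener", "1.3.0", "application-1043",
                                    "shortlisted", "Talent Acquisition",
                                    human_reviewer="recruiter-07")),
    ]
    for r in records_for_owner(log, "Talent Acquisition"):
        print(r["input_ref"], r["output"], r["model_version"], r["human_reviewer"])
```

Recording the model version and owner at decision time means that, when a bias is discovered later, the affected decisions and the responsible team can be identified without reconstructing history.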

As businesses using AI advance and the industry grows, they become more likely to face risks that conflict with ESG goals. It is therefore essential to identify and address these potential risks from the perspective of ESG management.

[Figure 3] The Relationship Between AI Systems and ESG Factors (Source: EY[11])

Governance Strategy for Implementing ESG Reporting in AI Businesses

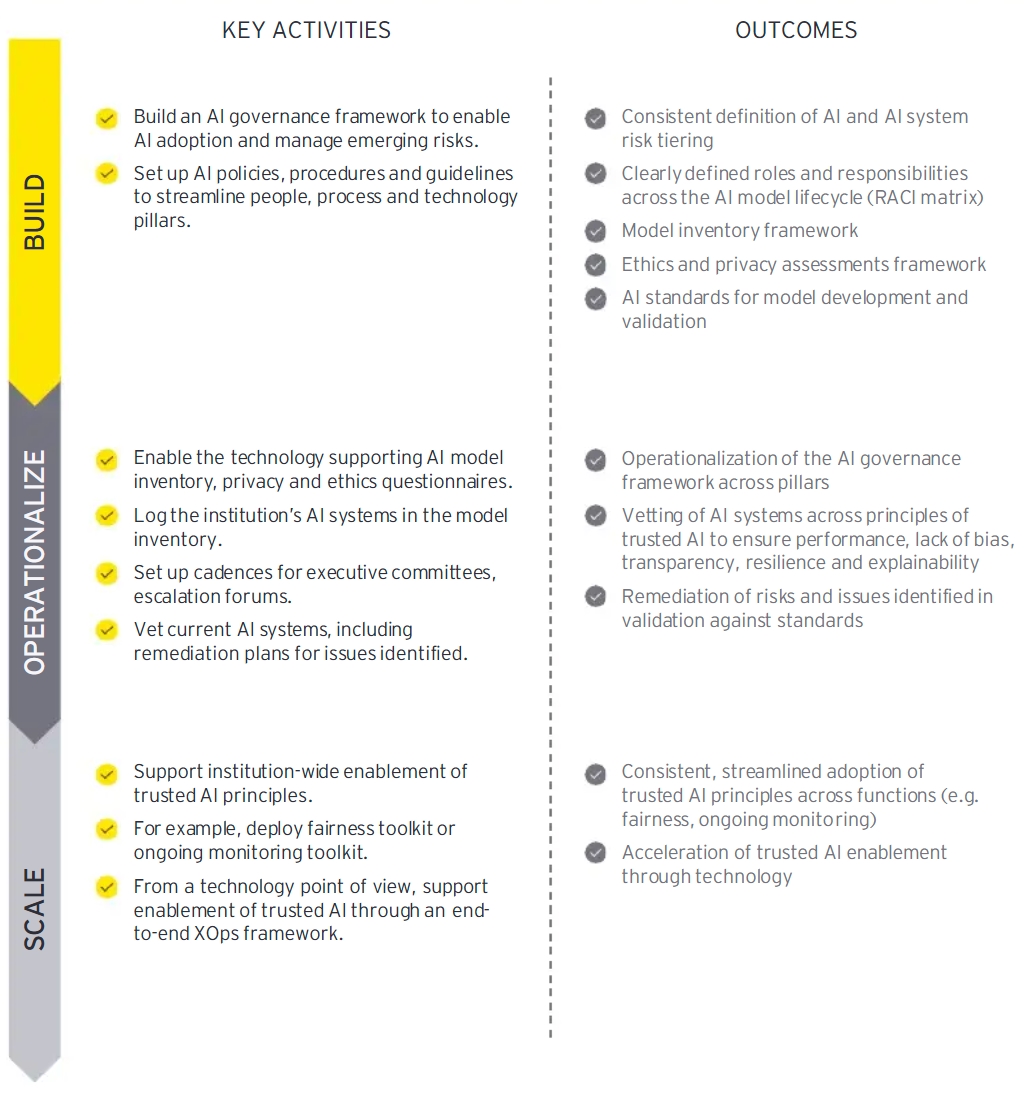

ESG reporting should go beyond a framework of principles and be systematized into an integrated form that specifies roles and responsibilities, operating methods, and indicators. Many companies already recognize the importance of ESG reports and AI governance, and various frameworks and guidelines have been developed and shared. But understanding each concept is one thing, and implementing the processes, operating procedures, and accountability structures that make them a reality is another. AI principles must be put into practice and applied consistently to strategy and risk management systems.

The global consulting firm EY offers guidance for organizations seeking to implement governance as they integrate AI into their business operations. It outlines the key activities and deliverables for identifying and managing AI risks in three stages: build, operate, and scale. When an ESG reporting system is first introduced, a company should establish an AI governance framework and work with stakeholders to develop policies, operating procedures, and guidelines for AI product development. Then, as governance is put into operation, it should establish in sequence a model evaluation and management system and a dedicated regulatory compliance function. It should also create and actively distribute guidelines so that these processes are understood and applied throughout the organization. When these tools are put in place step by step, they can accelerate ESG-related AI initiatives and boost the organization's productivity.

[Figure 4] Key Activities and Outcomes for Achieving AI Governance (Source: EY[11])

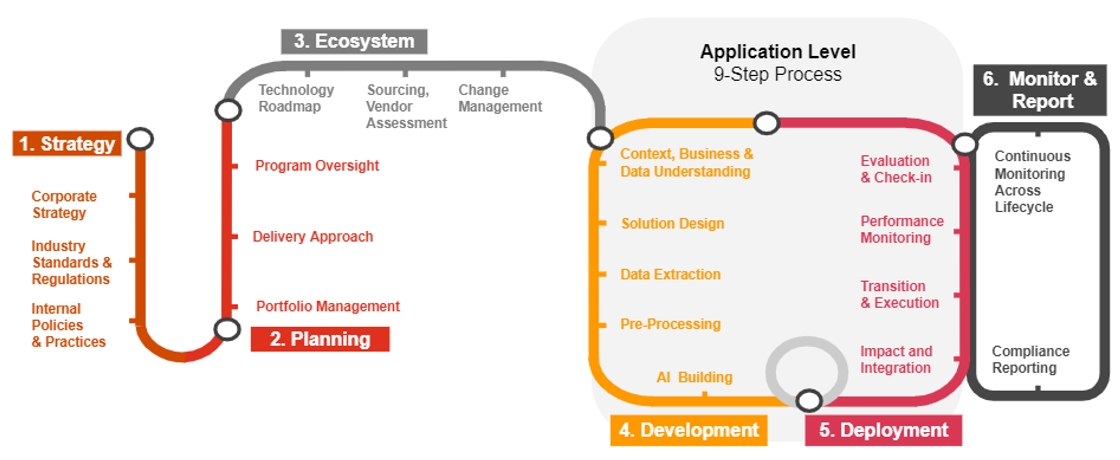

In particular, to achieve tangible product development outcomes, a company should establish end-to-end governance aligned with the AI model's development life cycle, from strategy through product development and monitoring. Defining each person's roles and responsibilities for every process, together with the evaluation criteria, strengthens the organization's capability to practice responsible AI. Unlike conventional software, AI products take notably longer to develop and require repeated rounds of performance improvement. Good governance becomes possible once a company understands its business strategy and defines the role of AI technology within it. It must also identify and institutionalize the tasks and procedures required at each stage of the development life cycle (solution, design, model deployment, and monitoring).
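The stage-by-stage assignment of roles and evaluation criteria can be kept as a simple registry that is checked for gaps. The sketch below uses the four life-cycle stages named in the text. The owners, deliverables, and exit criteria are hypothetical placeholders, and the monitoring stage is deliberately left incomplete to show what the check reports.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StageGovernance:
    owner: str                                  # role accountable for the stage
    deliverables: List[str] = field(default_factory=list)
    exit_criteria: List[str] = field(default_factory=list)


# Hypothetical assignments; stage names follow the life cycle in the text.
LIFECYCLE: Dict[str, StageGovernance] = {
    "solution": StageGovernance("Product owner", ["Use-case and risk assessment"],
                                ["Business KPI defined", "Data sources approved"]),
    "design": StageGovernance("ML lead", ["Model and data design document"],
                              ["Evaluation metrics agreed"]),
    "model deployment": StageGovernance("ML ops lead", ["Evaluation report"],
                                        ["Bias and accuracy checks passed"]),
    "monitoring": StageGovernance("", ["Monitoring dashboard"], []),  # incomplete on purpose
}


def governance_gaps(lifecycle: Dict[str, StageGovernance]) -> List[str]:
    """List stages that lack an accountable owner or any exit criteria."""
    gaps = []
    for stage, gov in lifecycle.items():
        if not gov.owner.strip():
            gaps.append(f"{stage}: no accountable owner")
        if not gov.exit_criteria:
            gaps.append(f"{stage}: no exit criteria")
    return gaps


if __name__ == "__main__":
    for gap in governance_gaps(LIFECYCLE):
        print(gap)
```

Here the check reports that the monitoring stage has neither an owner nor exit criteria. Such a gap is easy to miss when responsibilities live only in documents.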

[Figure 5] Ethical AI Framework (Source: PwC [13])

Ultimately, under a consistent governance framework, the deliverables of each process stage should be defined. Aspects such as performance suitability, deployment appropriateness, operating methods, model improvement, and discontinuation should then be evaluated and shared through an agreed evaluation system. Continuous monitoring after deployment is also needed to improve the model's performance. To assess and control the model's progress and outcomes at each stage against objective criteria, a company should link business KPIs, user metrics, and AI model performance metrics and express these relationships as quantifiable indicators. Stage-specific metrics can then serve as internal criteria for evaluating the business's sustainability, while ESG reports can be used to build trust with key stakeholders.
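Linking business KPIs, user metrics, and model metrics can be as simple as one threshold table evaluated at each stage gate. In the sketch below, the metric names and target values are hypothetical, and treating an unmeasured metric as a failure is a design assumption. The gate only flags problems. Whether to improve, redeploy, or discontinue the model remains a decision for the accountable owners.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Threshold:
    layer: str       # "business", "user", or "model"
    minimum: float   # observed value must be >= minimum to pass


# Hypothetical indicators and targets for one stage gate; not real benchmarks.
GATE: Dict[str, Threshold] = {
    "conversion_rate": Threshold("business", 0.03),
    "user_satisfaction": Threshold("user", 4.0),
    "answer_accuracy": Threshold("model", 0.90),
    "fairness_ratio": Threshold("model", 0.80),
}


def evaluate_gate(observed: Dict[str, float],
                  gate: Dict[str, Threshold]) -> Tuple[str, List[str]]:
    """Return ("pass", []) or ("review", failed metrics as 'layer:name')."""
    failures = []
    for name, threshold in gate.items():
        value = observed.get(name)
        # A metric that was never measured counts as a failure, not a pass.
        if value is None or value < threshold.minimum:
            failures.append(f"{threshold.layer}:{name}")
    return ("pass" if not failures else "review"), failures


if __name__ == "__main__":
    status, failed = evaluate_gate(
        {"conversion_rate": 0.05, "user_satisfaction": 3.6, "answer_accuracy": 0.93},
        GATE,
    )
    print(status, failed)
```

Tagging each failure with its layer shows whether a problem sits in the model itself or in the business or user outcomes it is supposed to drive. Reports built from the same table stay consistent from one stage to the next.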

Conclusion

Generative AI will significantly transform the operations of both the companies that build AI models and the companies that use them. If generative AI is not developed and used carefully, it can cause legal, social, and economic losses. Companies that proactively prepare governance structures and ESG reports will be better positioned to run sustainable businesses. To sustain ESG reporting initiatives, companies must keep identifying risks as the regulatory environment changes rapidly. They must also take practical measures: establishing governance structures, building technology and data management systems, and formulating AI usage guidelines. A solid foundation for mitigating each ESG risk will help streamline operations across security, data management and protection, and responsible AI. Ultimately, it will build a corporate brand that customers, investors, and other stakeholders can trust.

Furthermore, AI technology has significant potential to address ESG-related challenges. It can help lower greenhouse gas emissions by analyzing energy consumption and suggesting optimal usage scenarios. It can also contribute to healthcare by expanding access to medical services for vulnerable populations. In addition, it can raise productivity across many sectors by making products accessible to financially underprivileged people. If the risks associated with AI can be effectively controlled, AI is expected to bring significant benefits to humanity in many areas. Hopefully, national and social standards, together with corporate efforts, will enable AI technology to drive meaningful change across an even wider range of domains.

References

[1] McKinsey Digital, “The economic potential of generative AI: The next productivity frontier”, Jun 14, 2023

[2] Goldman Sachs, “Generative AI could raise global GDP by 7%”, Apr 05, 2023

[3] Ars Technica, “Lazy use of AI leads to Amazon products called ‘I cannot fulfill that request’”, Jan 12, 2024

[4] McKinsey, “As gen AI advances, regulators—and risk functions—rush to keep pace”, Dec 21, 2023

[5] Cigionline.org, “AI-Related Risk: The Merits of an ESG-Based Approach to Oversight”, Aug 21, 2023

[6] Samsung SDS Insight Report, “Three Factors to Strengthen Business Competitiveness Using Generative AI”, Jan 03, 2024

[7] MIT Technology Review, “Training a single AI model can emit as much carbon as five cars in their lifetimes”, Jun 06, 2019

[8] Earth.org, “The Green Dilemma”, Jul 18, 2023

[9] Forbes, “Confirmed: Apple Caught In Siri Privacy Scandal, Let Contractors Listen To Private Voice Recordings”, Jul 30, 2019

[10] Reuters, “Amazon scraps secret AI recruiting tool that showed bias against women”, Oct 11, 2018

[11] Ernst & Young, “Artificial intelligence ESG stakes”, Oct 31, 2023

[12] Samsung SDS Insight Report, “Responsible AI Makes for Trustworthy Product Development Process”, Aug 23, 2023

[13] PwC, “PwC's Responsible AI”

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator's permission is prohibited.

Senior Program Manager at SAP France

Majored in computer science in Korea and worked as a developer at LG and Fujitsu Korea for 7 years.

Moved to Paris, France in 1998, worked as a development manager and program manager at Business Objects, and currently works as a product/program manager in the Engineering UX Division at SAP.