Emergence of New Risks Due to Rapid AI Advancements

Public concerns about digital technology have mostly focused on the possibility of personal data leaks and abuse for the past decade. To alleviate these concerns, major countries have been working to establish legal and institutional measures, such as the EU’s General Data Protection Regulation (GDPR), which allows Internet users to control their personal data.

However, as AI became the hot topic with the emergence of ChatGPT 3.5 at the end of 2022, AI technology began to be adopted in a wide range of areas, such as recruitment, credit assessment in the private sector, and administrative process and judicial support in the public sector. Accordingly, public concerns and interest have shifted to the way data is collected and used in complicated and evolving algorithms. This is because AI has instead resulted in several unforeseen negative consequences, such as reinforcing social discrimination and providing plausible false information, contrary to the expectation that AI would always make rational decisions based on objective data.

The side effects caused by AI can be broadly categorized into three types. The first is exposure to biased, toxic, and erroneous information. Since AI learns from data collected from the real world, it can exclude or favor certain groups, contain harmful content such as violence, and provide false answers. The second is the copyright issue. When generated content references or copies existing works, intentionally or unintentionally, the issue of intellectual property infringement, which has been reported frequently these days, may arise. Also, there is an ongoing discussion about the extent to which copyright should be recognized for creative works, such as poetry and novels, generated by AI. The third is the issue of information protection, particularly the risk of personal data and internal corporate information leaks resulting from AI.

Table 1. Types of Side Effects/Controversies Caused by AI.

| ① Exposure to Biased, Toxic, and Erroneous Information |

|

| ② Copyright Issue |

|

| ③ Information Protection |

|

For example, one empirical study sparked controversy by revealing that Facebook ads, which provide AI-based customized advertising services to users, are biased based on race and gender. Job advertisements for supermarket cashiers were displayed to about 85% of women, while taxi driver ads reached around 75% of Blacks. Furthermore, housing sale advertisements were found to be exposed more frequently to whites, whereas rental ads were relatively more directed at Blacks. The public sector was not much different. The UK Home Office has been using AI to process visa applications, but reports have shown that applications from countries with larger non-white populations than white people are being delayed for no reason. Ultimately, the Home Office declared that it would assess the processing system’s functionality and stop using AI.

Copyright issues are also being continuously raised. AI primarily learns from existing data, some of which is subject to copyright protection. AI is trained to use this data to generate written content that emulates a specific style or responds in a certain way. These results may be similar or even identical to existing works protected by copyright. At the end of 2023, for example, The New York Times filed a lawsuit against OpenAI, claiming that AI companies are infringing on its intellectual property rights by using its vast content without permission. Furthermore, there is substantial controversy over the extent to which AI-generated works should be protected by copyright. In light of this debate, the Korean government released the "AI Copyright Guideline" in December 2023, making it clear that AI-generated content cannot be classified as copyrighted works and cannot be subject to copyright registration. However, these guidelines do not legislate copyright regulations for AI-generated works, and no overseas countries have yet enacted laws governing copyright for AI creations. There has yet to be a societal consensus on how much human creativity must be included in AI-generated works to qualify for copyright protection.

There has been a significant rise in instances of privacy violations caused by AI. Global companies such as Apple, Google, and Microsoft collected users’ voices with the excuse of training AI, and Apple, in particular, faced controversy for collecting sensitive personal information, such as users’ medical and credit data, to train Siri.

In response to the diverse risks associated with AI, leading countries are increasingly working to define high-risk AI and establish classification criteria through legislation. The EU AI Act (Artificial Intelligence Act, hereinafter referred to as the "EU AI Act"), which will be explained later, divided AI risks into four levels: △ minimal or low risk, △ limited risk, △ high risk, and △ unacceptable risk, and applied differentiated regulations based on the risk level. The Act categorized systems that can affect access to education, employment, and financial services, such as important infrastructure, interviews, or loan screening, and that use AI in a 'high risk' manner and whose AI was used for purposes of social discrimination or human behavior manipulation, as ‘unacceptable’ AI.

Also, the United States’ Algorithmic Accountability Act has classified AI that is involved in important decisions that can have a substantial impact on humans, such as education and job training, including assessment and certification, financial and legal services, including mortgage loans and credit, and essential infrastructure, including electricity and water, as ‘high risk’ AI.

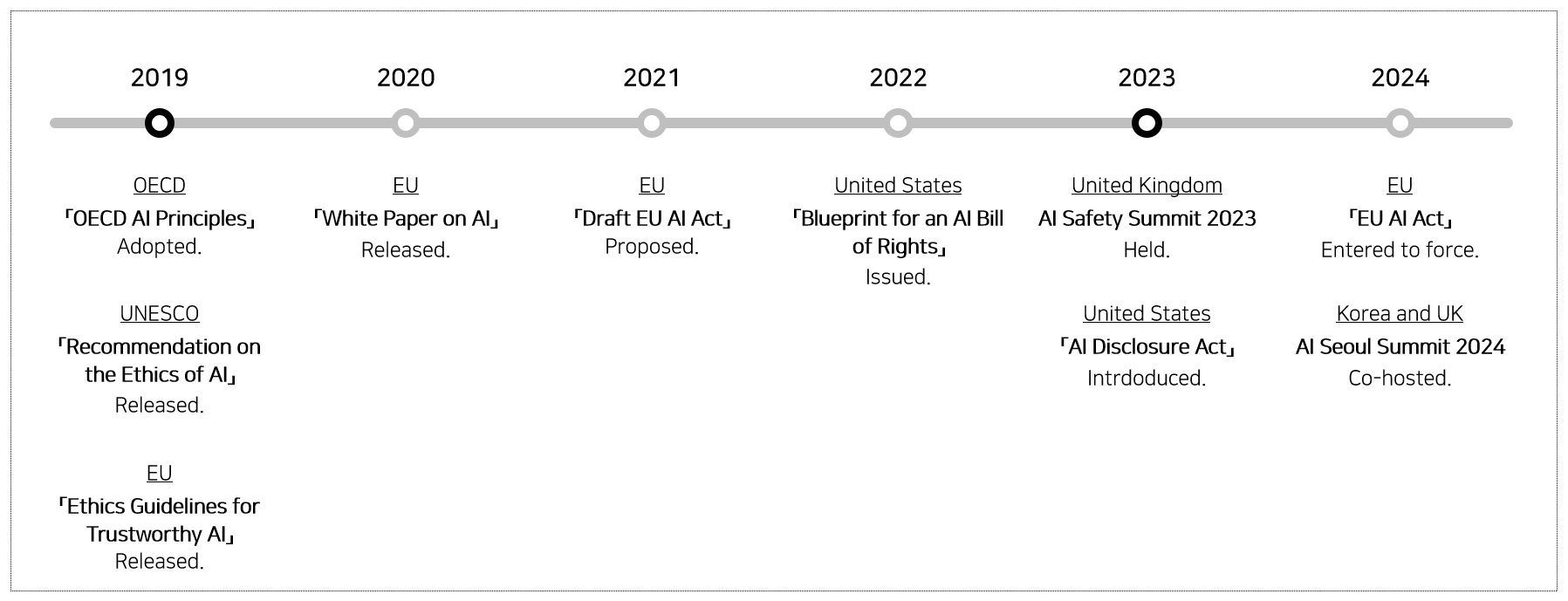

To prevent the negative impacts of AI that could have a significant impact on human life, various efforts have been made since the late 2010s, including declarations, recommendations, and legislation by 1) multilateral conferences, 2) international organizations, and 3) the governments of various countries.

Global Trends in AI Risk Management

Figure 1. Major Regulations and Policies for AI Risk Response.

Figure 1. Major Regulations and Policies for AI Risk Response.

- 2019

-

- OECD「OECD AI Principles」 Adopted.

- UNESCO「Recommendation on the Ethics of AI」 Released.

- EU「Ethics Guidelines for Trustworthy AI」 Released.

- 2020

-

- EU「White Paper on AI」 Released.

- 2021

-

- EU「Draft EU AI Act」 Proposed.

- 2022

-

- United States「Blueprint for an AI Bill of Rights」 Issued.

- 2023

-

- Inited Kingdom AI Safety Summit 2023 Held.

- United States「AI Disclosure Act」 Intrdoduced

- 2024

-

- EU「EU AI Act」Entered to force.

- Korea and UK AI Seoul Summit 2024 Co-hosted.

1) Multilateral Meetings - AI Summit

The first-ever AI Summit can be mentioned as part of the international community’s effort to address AI risks. The AI Safety Summit was held at Bletchley Park in England on November 1 and 2, 2023. At the AI Summit, held to address the need for international cooperation to minimize the risks that AI may pose to humanity, 28 countries, including Korea, the US, and China, agreed to announce a joint declaration on AI safety.

The main points of the agreement include: △ designing, developing, and deploying AI in a human-centered, trustworthy, and responsible manner; △ developing AI that benefits everyone in areas such as education, public services, and security; △ imposing responsibilities for ensuring AI safety on all stakeholders using AI, particularly developers; △ finding solutions to AI issues based on strong international cooperation.

It is significant that, for the first time, leaders from major countries gathered to discuss safe and sustainable AI and issued a joint declaration, and it is noteworthy that the importance of developer responsibility and international cooperation was highlighted. However, as this was the first meeting, it was limited to merely issuing a joint declaration rather than presenting specific implementation measures. Nevertheless, to prepare specific implementation measures for the declaration made at the summit and to conduct a mid-term review of follow-up actions after the summit, a joint South Korea-UK AI Summit is scheduled to take place again in May of this year.

2-1) OECD AI Recommendations

In May 2019, the OECD adopted the "OECD Recommendations on AI" (Council Recommendation on AI) to implement sustainable and trustworthy AI. Specifically, the recommendation encompasses principles for AI that are innovative, trustworthy, and respect human rights and democratic values. To implement this, the recommendations emphasize the following: △ First, efforts to implement AI to advance inclusion, promote welfare, and reduce social inequality; △ Second, respect for human-centered values such as freedom, privacy, equality, and diversity throughout the life cycle of AI systems; △ Third, efforts by AI developers to promote the understanding of AI systems and secure transparency and explainability; △ Fourth, systematic overcoming of risks such as privacy and digital security throughout the entire life cycle; and △ Fifth, the responsibility of AI developers to ensure that AI systems remain up-to-date and properly functional. The statement takes the form of a recommendation, which has a stronger binding force than a declaration, and it is significant for being the first to emphasize inclusiveness, fairness, diversity, and transparency in AI, establishing norms for AI in the international community.

2-2) UNESCO Recommendation on the Ethics of Artificial Intelligence

In November 2019, UNESCO announced the "Recommendation on the Ethics of Artificial Intelligence" at the UNESCO General Conference. The recommendation includes four core values, 10 principles, and 11 policy recommendations that AI should pursue. First, the four core values that AI should strive for are: △ respect for human rights, freedom, and humanity; △ flourishing of the environment and ecosystem; △ guaranteeing diversity and inclusion; and △ a peaceful, just, and interconnected life. The 10 principles include conducting AI impact assessments, prohibiting the use of AI systems for the purpose of public surveillance, and designing participatory and cooperative governance. This recommendation is significant as it represents the first global agreement on AI, signed by 193 UNESCO member states. However, like the OECD recommendation, the international organizations' recommendations are essentially non-binding and contain a lot of overlapping content with the OECD guidelines. Moreover, it is limited by the fact that it excludes the United States due to its withdrawal from UNESCO.

3-1) EU AI Act

The recommendations from international organizations (OECD and UNESCO) established principles for implementing transparency and fairness in AI systems and served as a milestone for preventing AI discrimination. However, their effectiveness is constrained by their advisory nature. Therefore, with the rapid growth of AI, there was a growing need for legislation that can regulate AI-related issues to prevent negative impacts, such as bias, discrimination, and violation of privacy rights, and to ensure the safer utilization of AI.

The EU issued the "Ethics Guidelines for Trustworthy AI" in 2019 and the "White Paper on AI" in 2020, followed by the preparation of a draft for the EU AI Act in April 2021 to establish a unified framework for AI regulation within Europe. Since then, as new technologies, such as generative AI and GPT, emerged and new issues arose, the EU revised the draft, and finally, in October 2023, the 27 EU member states unanimously agreed on the "EU AI Act," an AI technology regulation bill, and in March 2024, the EU Parliament passed the bill in a plenary session. While it still requires approval from each country, it essentially passed the major hurdle.

First, the EU classified the risks of AI into four stages based on the bill (△ minimal or no risk; △ limited risk; △ high risk; △ unacceptable risk) and outlined the obligations to be adhered to for each level.

Specifically, first, it stipulates human supervision of high-risk AI systems. It established the principle that high-risk AI systems should be designed and developed in a way that allows for human supervision during the manufacturing process.

Second, the obligations that high-risk AI system operators must comply with were established. For example, high-risk AI system operators are required to complete a conformity assessment before launching products or starting services. The conformity assessment analyzes the potential risks of high-risk AI systems, determines whether measures have been taken to mitigate their impact on fundamental rights and safety, and evaluates the appropriateness of the data used.

Third, it stipulates obligations for users of high-risk AI systems. Here, users refer to entities, public institutions, organizations, and other bodies that use the AI system based on their own authority while excluding users performing non-professional private activities. Users of high-risk AI systems have an obligation to be vigilant about the potential for AI to infringe on fundamental human rights and to ensure the data used in AI systems is relevant to its intended purpose. In addition, users are obligated to stop using the AI system if it poses a risk to human health, safety, or human rights, and they must notify the AI provider or distributor of this issue. Furthermore, users are required to retain operational records of the high-risk AI system for a designated period to use them for post facto diagnosis to find any causes of human rights violations in AI systems.

The significance of this legislation lies in its detailed regulations concerning AI technology, and it is noteworthy that the obligations of not only AI developers but also users have been clearly defined. This legislation appears to have codified the discussions held in the international community, such as those by the OECD and UNESCO, and it is expected to serve as a reference for future AI-related laws in various countries.

3-2) US Algorithmic Accountability Act, Executive Order on AI

In fact, the US AI policy has been more flexible in its approach to AI regulation than the EU, focusing on supporting AI development on the national level for industrial development and innovation. However, as AI-related issues have continued to arise, the Algorithmic Accountability Act was first introduced in April 2019, which mandates that impact assessments for decision-making systems and privacy be conducted in advance, exclusively for high-risk automated decision-making systems*. This comes as authorities impose an obligation on companies to assess these risks in advance amid persistent concerns regarding privacy violations and bias/errors.

*As any system, software, or process that uses computing and whose outcomes serve as the basis for decisions or judgments. Including those derived from machine learning, statistics, or other data processing or artificial intelligence technologies, but excluding passive computing infrastructure.

However, as automated decision-making systems are increasingly used in more areas, the Algorithmic Accountability Act, which was simultaneously proposed for amendment in both the House and Senate in 2022, has been significantly expanded in scope. It appears that impact assessments must be conducted for both automated decision-making systems and enhanced critical decision-making processes**. Critical decision-making processes refer to decisions that significantly impact a consumer’s life, such as education, infrastructure, healthcare, or finance. The impact assessment includes various aspects, such as past and present performance, measures to mitigate negative effects on consumers, and actions to enhance data privacy protection.

**A process, procedure, or other activity that uses automated decision-making systems to make significant decisions.

Another notable aspect is that, under this Act, if an AI system causes unreasonable discrimination or fails to comply with the requirement to conduct impact assessments regarding privacy violations, the relevant authorities can enforce the law. For instance, if citizens are threatened or disadvantaged by an AI system, the State Attorney General can file a lawsuit on behalf of the victims to seek legal remedy.

The key features of this Act include emphasizing the transparency and accountability of algorithms, imposing an obligation on companies to conduct impact assessments in advance, and allowing the state to file lawsuits on behalf of individuals who suffer unexpected damage from AI.

In addition, the United States issued an "Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence" in October 2023. This executive order imposes stronger obligations on AI providers than the previous Algorithmic Accountability Act to prevent the abuse of AI technology. Specifically, companies that develop AI involved in important decision-making processes must pass a test against the “red team (mock enemy),” a government-organized verification expert team. Also, these companies are required to continuously report the consumer impact after the service is provided, and the government has an obligation to evaluate these reports. And this executive order directs the National Institute of Standards and Technology to develop standards and technologies that ensure the security and reliability of AI. At the same time, it aims to address copyright controversies and prevent the spread of misinformation by requiring AI-generated content to include identifiable watermarks.

Table 2. Global Key Policy/Legislation.

| Multilateral Conference |

AI Summit (November 2023) |

|

| International Organizations |

OECD AI Recommendations (May 2019) |

|

| UNESCO AI Ethics Recommendations (November 2019) |

|

|

| Major Countries' Act |

EU AI Act Passed (March 2024) |

|

| Introduction of the US Algorithm Accountability Act (October 2022) |

|

|

| US AI Executive Order (October 2023) |

|

In line with these global trends, there have recently been movements towards regulating AI in South Korea as well. The previously mentioned AI Copyright Guidelines issued by the Ministry of Culture, Sports, and Tourism stipulate the scope of copyright recognition for AI-generated works. Also, there is growing interest in the AI Basic Act proposed by the Ministry of Science and ICT, which has been pending for over a year in the plenary sessions of the standing committee. This Act prioritized the development of AI technology and adhered to the principle of "prior approval and post-regulation," making it challenging to be presented in the plenary session. However, with global trends, such as the passage of the EU AI Act and the announcement of the US executive order on AI, and the growing visibility of negative impacts associated with AI, there have been increasing calls for urgent legal and institutional support to enhance the national competitiveness of AI technology and to demonstrate responsible AI. In response, the government has announced its intention to remove that principle and work toward the passage of the act. In addition, the Korea Communications Commission announced in its business plan for March 2024 that it would promote the enactment of the “Act on the Protection of Users of Artificial Intelligence Services” and announced a plan to introduce a labeling system for AI-generated content and establish a dedicated reporting channel for addressing damages related to AI. Thus, South Korea is also gradually implementing regulations to mitigate the adverse effects of AI.

Implications of Global Policies/Legislation

It is noteworthy that the AI-related laws and recommendations from multilateral forums, international organizations, and major countries examined so far have something in common. First, the roles and responsibilities of AI developers have been strengthened. Developers are required to maintain the quality of AI systems at the highest level based on available technologies and information, and they must transparently disclose the processes of data collection and processing. Second, there has been an emphasis on the proactive detection and prevention of potential risks posed by AI. As AI rapidly develops, there is an emphasis on preventing risks since AI systems can violate fundamental human rights, such as equality and privacy. Third, there is an emphasis on copyright protection, as AI fundamentally learns from existing data to generate output. Finally, it is recommended that the issues caused by AI be addressed through international cooperation and responses at a global level since the scope of these problems generated by AI can be transnational.

Thus, in the process of developing and using AI, companies first need to consider mandating the formation of a red team to identify vulnerabilities in advance. The formation of a red team was also mandated in the US AI Executive Order, which was mentioned above. Besides testing with existing code, companies need to conduct mock tests in various ways, such as generating inputs for malicious prompts and models to ensure the systems are validated before their launch. For reference, Google has established an AI red team comprised of in-house experts to simulate attacks, while OpenAI is reportedly encouraging the involvement of people outside the company on its red team. By using various attack methods from the red team, it is necessary to meticulously verify, in advance, whether the system supports illegal activities, provides biased information and judgments, generates toxic content, or infringes on privacy, and to prevent these from occurring. It is also time to document the obligations of in-house developers by doing things like enhancing transparency through providing explanations of AI systems and to provide continuous training opportunities so that they can acquire the latest technologies in a timely manner. It should be noted that the current international responses to AI risks emphasize the obligations and responsibilities of AI developers. Third, measures should be taken to ensure copyright protection, such as attaching watermarks to content from generative AI and safeguarding the copyrights of works and news used for AI training. To this end, it is important to consider establishing internal guidelines for managing AI-generated content and using existing materials. Finally, it is crucial to continuously advance technologies and address vulnerabilities through various types of networks. In other words, we need to continuously enhance and develop AI application technologies through mutual collaboration between the private sector, academia, and government. Additionally, a system capable of reporting these issues directly to headquarters and conducting immediate analysis should be established for cases when unforeseen vulnerabilities are exposed after the launch of AI products based on various networks and processes.

The rapid advancement of AI technology presents both various risks and opportunities simultaneously. Although AI has the potential to offer substantial benefits for companies and countries, launching AI products or services that function incorrectly can lead to serious repercussions. It is time to make every effort to develop safe, reliable, and sustainable AI systems in advance based on the norms, laws, and recommendations presented by the international community.

References

[1] 과학기술정보통신부, 2023.11.7, 과기정통부, ‘인공지능 안정성 정상회의 참석 성과 발표’, 보도자료

[2] 김송옥, 2021. 11, AI 법제의 최신 동향과 과제 - 유럽연합(EU) 법제와의 비교를 중심으로, 「공법학연구」

[3] 김일우, 2024, 고위험 인공지능시스템의 차별에 관한 연구, 「서강법률논총」

[4] 김현경, 2022, UNESCO AI 윤리권고 쟁점 분석 및 국내법제 개선방향, 「글로벌법제전략」

[5] 노성호, 2023, 금융산업에서의 인공지능(AI) 활용 방안에 따른 리스크 요인 분석, 「자본시장연구원」

[6] 디지털데일리, 2024.2.14, AI 기본법 통과 필요성 재차 강조한 정부… 과방위에 쏠리는 눈길

[7] 디지털투데이, 2024.3.20, AI 기본법, 22대 국회 막판 통과할까?

[8] 매일경제, 2023.12.18, AI가 만든 그림·소설 저작권 불인정… 저작권 등록 규정에 명시

[9] 방송통신위원회, 2024.3.21, 2024년 주요업무 추진계획

[10] 아시아경제, 2023.12.28, AI 저작권 첫 가이드라인… “적절한 보상”vs “경쟁 뒤처져”

[11] 오성탁, 2020 1/2월, OECD 인공지능 권고안, 「TTA저널」

[12] 윤정현, 2023.12.6, 인공지능 안전에 관한 블레츨리 정상회의의 의미와 시사점 「국가안보전략연구원」

[13] 파이낸셜뉴스, 2024.3.21,‘AI 생성물 표시 의무화’… AI 이용자 보호법 제정 추진

[14] 한국경제, 2024. 3. 22, AI 이용자 보호 법률 생긴다… AI 피해 신고 전담창구도 마련

[15] 홍석한 2023.4, 미국 “2022 알고리즘 책임법안”에 대한 고찰, 「미국헌법연구」

[16] EU, https://digital-strategy.ec.europa.eu/en/news

[17] Jacob Abernethy, March - April 2024, “Bring Human Values to AI”, 「Harvard Business Review」

[18] OECD, Recommendation of the Council on Artificial Intelligence

[19] UNESCO, Recommendation on the Ethics of Artificial Intelligence

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator's permission is prohibited.