The Economics of AI: Cost Optimization Strategies for a Successful AI Business

Preface

The emergence of generative AI, sparked by the launch of ChatGPT at the end of 2022, has brought new business opportunities and ignited the imagination of many companies. Across various fields, there have been numerous attempts to revolutionize the customer experience using generative AI technology. Companies of all sizes are launching new services, either by actively leveraging the APIs of commercial models or by building products on open-source models like Llama. New services such as Arc Search, Perplexity, and Liner have also emerged, offering novel interface experiences.

However, most AI companies struggle financially, failing to balance revenue and costs.[1] Generative AI technology comes with huge costs, yet many businesses have not produced commensurate results. For instance, Stability AI, which offers image generation services, is expected to incur approximately $96 million in annual costs this year while generating only about $60 million in revenue. Such financial imbalances often lead to restructuring. Startups such as Inflection AI, which launched a personal AI assistant chatbot, and Tome AI,[2] which automatically generates presentations, also face immense AI costs while earning minimal revenue, raising concerns about the sustainability of generative AI businesses. Because the AI industry requires massive investment in server infrastructure, data acquisition, and advanced technical talent, many domestic startups run into operational difficulties before achieving concrete business results.[3]

Many companies fail because they do not take costs seriously while revenue projections remain uncertain. Depending on the adoption method, user base, and scale of interactions, generative AI can incur costs that exceed expectations. Costs should therefore be a primary consideration when assessing the sustainability of an AI business from the outset. It is crucial to manage the performance and costs of AI models through visible metrics from the beginning and to forecast expenses in line with the pace of business growth. In addition, measures such as spending guardrails are needed to control excessive spending. This article explores methods for calculating and managing costs to keep an AI business sustainable.

Adopting AI Services Considering the Goals and Costs

The first essential step is understanding which AI service implementation method best suits your business. Implementation methods using commercial AI solutions fall into three categories.

The first approach is using Closed Source Services by Third-Party Vendors: turnkey AI solutions provided by AI companies, such as OpenAI's ChatGPT or Naver's HyperCLOVA X. These services package proven, high-performance models as managed services, allowing quick adoption, and they typically come with robust customer support. However, because the service is closed-source, it is difficult to customize it to specific business needs. Additionally, a business that becomes locked into the software may grow dependent on the vendor's technology.

The second approach is Third-Party Hosted Open Source Services: open-source models tailored to business needs but hosted by a third-party company. Examples include HuggingGPT, Lambda, and South Korean company Kakao's KoGPT. Unlike closed-source services, the models' structure and training methods are publicly available, allowing flexible customization and development. However, the quality of the AI model can vary significantly depending on the open-source model chosen and how it is applied.

The third approach is a Do-It-Yourself (DIY) method, in which organizations build and deploy AI models themselves using infrastructure and tools provided by hyperscalers, such as AWS SageMaker, Google Cloud Vertex AI, and Azure AI. This method offers complete control over the model, but quality, accuracy, performance, and development timeline depend on the organization's own capabilities. Although organizations can also develop and host workloads entirely on their own, building an LLM from scratch is very expensive, so that option is typically excluded when discussing LLM-based services.

<Table 1> Pros and Cons of each AI Service

| AI service | Pros | Cons |
|---|---|---|
| Closed Source Services by Third-Party Vendors | Rapid implementation; high-quality models; consistent and reliable customer support; security advantage: vendors ensure their models do not allow data breaches or unauthorized access (however, if the vendor's security is inadequate, there is a risk of severe reputational damage or fines under data protection laws) | Limited customization options; vendor lock-in; bias/privacy risks; high cost due to proprietary technology; high dependency on the vendor for AI technology updates |
| Third-Party Hosted Open-Source Services | Flexible customization/control; compliance with privacy/security regulations; community support; more economical than closed-source services | Quality, accuracy, performance: user-managed/improved; requires a high level of technical expertise; time-consuming to achieve desired outcomes; inefficient support channels |
| DIY on Cloud Provider | Full control over the model; compliance with privacy/security regulations is possible; adjustable and manageable budget; easy to integrate with other cloud services | Quality, accuracy, performance: user responsibility; requires significant expertise in AI and cloud management; long time to market |

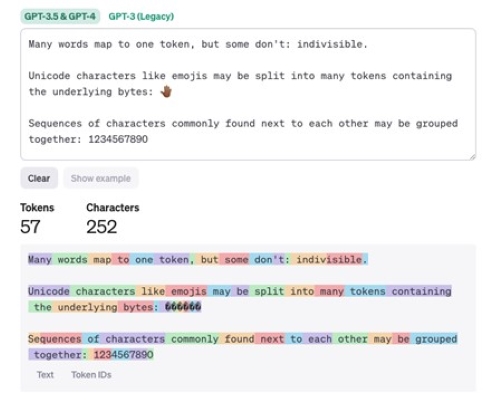

Let's look at the cost factors of each of the three approaches in light of a company's situation.

First, Closed Source Services are charged by data volume: costs depend on the number of tokens (units of words or characters) processed as input and output, or on the number of API calls. How text is split into tokens varies by language, and costs grow with usage. However, for companies with limited technical personnel or deployment capabilities, this model can be more economical in the long run. Because auditing for security and regulatory requirements is so important in industries like finance and medicine, the security protocols and certifications of closed-source services can also make them an efficient choice there.[4]

Second, Third-Party Hosted Open-Source Services are significantly cheaper than Closed Source Services, but costs vary depending on how well the model is fine-tuned. Because these models can be customized and scaled extensively, they require personnel with specialized knowledge, and once the labor costs of such skilled professionals are considered, this approach may need a larger budget for some companies.

Third, in the DIY approach, where a company hosts the service on its own infrastructure, the company pays for the computing resources needed to run AI models, especially GPUs. It may also involve LLM licensing fees and require more time and skilled personnel[5] than the other methods.

[Figure 1] Example of GPT Token Operation Method (Source: OpenAI)


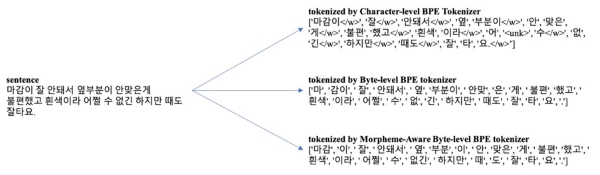

[Figure 2] Example of Token Calculation Method by Language (Source: OpenAI, Naver)


How commercial AI will be applied and used is an essential consideration when choosing an AI model and predicting costs. It is important to identify the area in which an AI model is required and which capabilities are needed, such as voice recognition, image processing, text summarization, or data analysis. The required level of complexity, whether a simple website chatbot or a more sophisticated and intelligent system, should be factored into the budget.

The method of AI service implementation also matters for model development. At its simplest, total cost can be calculated with the formula “Cost = Service Usage × Usage Fee.” However, what counts as usage and how it is priced vary with the type of service (e.g., SaaS-AI, PaaS, IaaS), the model size, the type of application model for the task, token-based pricing, and the duration of service use, so the exact formula differs slightly from one pricing model to another.
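As a minimal sketch of the usage-times-fee formula, the Python below totals a bill made of line items, each pairing a usage quantity with a unit fee, so token-based and time-based pricing can sit side by side. The `LineItem` class, the item names, and all quantities and prices are hypothetical placeholders, not any vendor's actual rates.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    """One billable item: a usage quantity multiplied by its unit fee."""
    name: str
    usage: float     # e.g. thousands of tokens, API calls, or GPU-hours
    unit_fee: float  # price per unit of usage (hypothetical)

    def cost(self) -> float:
        return self.usage * self.unit_fee


def total_cost(items: list[LineItem]) -> float:
    """Cost = sum over all items of (Service Usage x Usage Fee)."""
    return sum(item.cost() for item in items)


# Hypothetical monthly bill mixing token-based and time-based pricing.
items = [
    LineItem("input tokens (per 1K)", usage=50_000, unit_fee=0.0005),
    LineItem("output tokens (per 1K)", usage=10_000, unit_fee=0.0015),
    LineItem("GPU-hours", usage=100, unit_fee=2.0),
]
print(f"Total: ${total_cost(items):.2f}")
```

Keeping each pricing dimension as its own line item makes it easy to see which one dominates the bill when the service type or model size changes.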

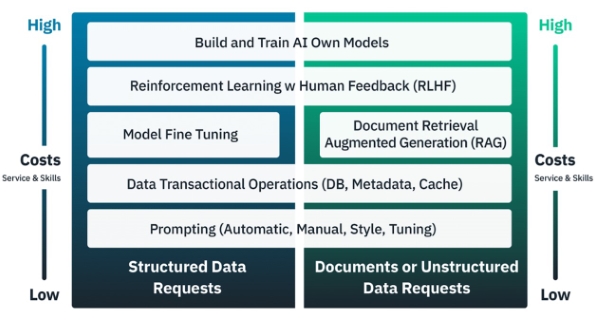

Prompt tuning is one of the LLM training and development methods that affect this formula. It can be optimized flexibly in manual, automated, or guardrail modes, and each mode can produce different token quantities and ratios. Prompts may be zero-shot (no examples) or few-shot (with examples), depending on whether static or dynamic prompt inputs are provided; supplying examples or additional information to the model incurs extra cost. Retrieval-Augmented Generation (RAG) involves a series of steps, including searching for facts, prompting with the retrieved factual data, and checking facts. Fine-tuning helps improve model quality and reduce bias, iterations, and hallucinations across stages such as POC, development, and production operation. Reinforcement Learning from Human Feedback (RLHF) adjusts reward functions for specific outputs, along with the scale of human involvement over time. Training a model from scratch involves generating a custom model through training and iteration, weighing the trade-offs between reuse and improvement, and bearing the costs of new training and of reaching the required quality and accuracy.

Each method for improving AI performance incurs costs throughout the learning process, including data conversion and input/output handling. It is therefore essential to determine the required level of performance and the corresponding learning methods. Once a method is selected, identify the cost factors involved, how much each is used, and the associated expenses in order to predict overall expenditure accurately. Although this process can be complex and cumbersome, every aspect is directly linked to cost.

[Figure 3] AI Model Development Stages and Costs (Source: FinOps Foundation[6])
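To make the cost of prompt design concrete, the sketch below compares the per-request cost of zero-shot, few-shot, and RAG prompts under per-1K-token pricing. Every token count and price is a hypothetical assumption chosen for illustration; real figures depend on the model, tokenizer, and vendor price list.

```python
def request_cost(prompt_tokens: int, output_tokens: int,
                 in_price_per_1k: float, out_price_per_1k: float) -> float:
    """Cost of one API call when input and output tokens are priced per 1K."""
    return (prompt_tokens / 1000) * in_price_per_1k + (output_tokens / 1000) * out_price_per_1k


# Hypothetical token counts for each prompt component.
QUERY = 100            # the user's question
EXAMPLE = 150          # one few-shot example
RETRIEVED_CHUNK = 300  # one passage retrieved for RAG
OUTPUT = 200           # expected answer length

IN_PRICE, OUT_PRICE = 0.5, 1.5  # hypothetical $ per 1K tokens

strategies = {
    "zero-shot": QUERY,
    "few-shot (3 examples)": QUERY + 3 * EXAMPLE,
    "RAG (4 chunks)": QUERY + 4 * RETRIEVED_CHUNK,
}
for name, prompt_tokens in strategies.items():
    cost = request_cost(prompt_tokens, OUTPUT, IN_PRICE, OUT_PRICE)
    print(f"{name}: {prompt_tokens} prompt tokens, ${cost:.4f} per request")
```

Because examples and retrieved context are resent with every request, their cost scales with traffic, which is why the choice between these methods belongs in the budget rather than only in the quality discussion.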


Key Factors Affecting the Cost of LLM AI Services

Once you have selected an AI solution deployment method, it's crucial to understand the cost factors associated with each deployment solution and the scope of ownership and intellectual property rights for effective budget management.

First, the primary cost driver for Closed Source Services by Third-Party Vendors is application API usage. Usage is measured in input and output tokens, which correspond to the pieces of text processed. Using plugins or additional models may add further costs. Because many commercially available LLMs are trained predominantly on English, Korean input may require more tokens for the same content, so it is important to confirm what data a commercial AI model was primarily trained on and how it tokenizes the relevant language. A base model incurs minimal training costs because it needs only prompt tuning, whereas extensive fine-tuning is costly. Less technical expertise is required than for the other approaches, which keeps labor costs down, and in most cases only PaaS-level management of SKUs (stock-keeping units, the billable items on a vendor's price list) is needed.
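To show why tokenization by language matters for budgeting, the sketch below scales an English-based monthly estimate by a token-inflation factor. The factor of 1.8 and the request volume and price are purely illustrative assumptions; in practice the factor should be measured by running representative Korean and English texts through the vendor's own tokenizer.

```python
def monthly_api_cost(requests_per_month: int, tokens_per_request_en: int,
                     price_per_1k_tokens: float, inflation: float = 1.0) -> float:
    """Monthly token cost, scaling an English token estimate by a language factor.

    inflation: measured ratio of tokens needed for the same content in the
    target language versus English (1.0 means no difference). Assumed here.
    """
    tokens = requests_per_month * tokens_per_request_en * inflation
    return tokens / 1000 * price_per_1k_tokens


english = monthly_api_cost(100_000, 500, 0.002)
korean = monthly_api_cost(100_000, 500, 0.002, inflation=1.8)  # hypothetical factor
print(f"English: ${english:,.2f}  Korean: ${korean:,.2f}")
```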

Second, the primary cost drivers for Third-Party Hosted Open-Source Models are the usage or rental time of infrastructure GPUs/RAM. Costs are incurred for model training, data processing volume, and storage capacity. Because a pre-trained model can be trained further rather than built from scratch, the overall cost is moderate among the three options. However, achieving optimal performance may require significant development costs and skilled personnel, and additional SKU costs may arise for elements such as models, data, and API calls. This creates a risk of budget overruns, so computing resources must be managed carefully. Customization also demands more specialized personnel and technical expertise than Closed Source Services.
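A minimal sketch of the rental-time cost drivers described above: GPU count × hourly rate × hours, plus storage billed per GB-month. The function name, GPU counts, rates, and the 730-hour month are hypothetical assumptions for illustration, not quotes from any provider.

```python
def hosted_model_cost(gpu_count: int, gpu_hourly_rate: float, hours: float,
                      storage_gb: float = 0.0, storage_rate_gb_month: float = 0.0,
                      months: float = 1.0) -> float:
    """GPU rental time plus storage, the main drivers for a hosted open-source model."""
    compute = gpu_count * gpu_hourly_rate * hours
    storage = storage_gb * storage_rate_gb_month * months
    return compute + storage


# Hypothetical: a 24-hour fine-tuning run on 8 GPUs ...
fine_tune = hosted_model_cost(gpu_count=8, gpu_hourly_rate=2.5, hours=24)
# ... then roughly one month (730 h) of serving on 2 GPUs with 500 GB of storage.
serving = hosted_model_cost(gpu_count=2, gpu_hourly_rate=2.5, hours=730,
                            storage_gb=500, storage_rate_gb_month=0.02)
print(f"fine-tuning: ${fine_tune:,.2f}  serving/month: ${serving:,.2f}")
```

In this illustration, always-on serving dwarfs the one-off training run, which is one reason the text stresses careful management of computing resources.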

Third, in the DIY approach on cloud systems, the primary cost drivers are likewise the usage or rental time of infrastructure GPUs/RAM. This method requires a high level of diverse technical expertise, because SKUs must be managed for every element, including hardware, software, modeling, model training, APIs, and licenses. It offers the greatest freedom in managing model development costs, but since the model must be trained from scratch, costs can vary significantly with the model's performance requirements and use case. In return, this approach provides complete control over all aspects of the AI system and full ownership of data and intellectual property. Identifying and understanding these cost drivers enables more informed decisions that align with the project's scope, technical requirements, and budget.
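One way to weigh a per-request API against a DIY deployment is a break-even calculation: self-hosting carries a large fixed monthly cost (GPUs, operations, and personnel) but a lower marginal cost per request. The sketch below assumes both cost curves are linear in request volume; the function name and all figures are hypothetical.

```python
import math


def breakeven_requests(fixed_monthly_cost: float, api_cost_per_request: float,
                       self_host_cost_per_request: float = 0.0) -> float:
    """Monthly request volume above which self-hosting beats a per-request API.

    API:        cost = n * api_cost_per_request
    Self-host:  cost = fixed_monthly_cost + n * self_host_cost_per_request
    """
    margin = api_cost_per_request - self_host_cost_per_request
    if margin <= 0:
        return math.inf  # self-hosting never catches up on per-request cost
    return fixed_monthly_cost / margin


# Hypothetical: $20,000/month fixed DIY cost, $0.01 per API call vs $0.002 self-hosted.
n = breakeven_requests(20_000, 0.01, 0.002)
print(f"Self-hosting pays off above ~{n:,.0f} requests per month")
```

Below the break-even volume the API is cheaper; above it, the fixed investment starts to pay back, provided the team can actually deliver the required quality.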

A cost-reduction strategy should follow the direction in which the organization uses LLMs. Developing it requires planning the AI deployment strategy with its impact on existing spending commitments and capacity in mind. According to the FinOps Foundation, when using closed-source AI services from third-party vendors, pricing structures can fluctuate and potentially rise as demand and operating costs increase.[6] The latest versions of LLMs and plugins, and longer context lengths, can cost 5 to 20 times more than previous versions, so choices should be made wisely based on the workload. Capacity commitment discounts vary with timing, amount, and duration and should be examined in detail against existing pricing agreements. For Third-Party Hosted Open-Source models, training and fine-tuning costs for a POC have fallen rapidly. Hardware prices have also stabilized for small- to medium-scale deployments, but in large-scale deployments GPU resources remain limited, so capacity must be secured in advance. Moreover, the latest CPU/GPU/TPU generations carry a higher unit cost than previous generations at the same GB-of-RAM-per-core ratio. In the DIY cloud approach, serverless and GPU-managed middleware services can reduce costs as well as the technical expertise and effort required. By leveraging pre-built model templates and recommended compute optimization guidelines, organizations can lower baseline costs and avoid unnecessary expenses, thereby reducing total cost.
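Whether a capacity commitment discount actually saves money depends on how much of the committed capacity is used. The sketch below assumes a simple model in which every committed hour is billed at the discounted rate whether or not it is used; under that assumption the commitment only wins when utilization stays above (1 − discount). The rates and discount are hypothetical, and real agreements may differ.

```python
def effective_cost_per_used_hour(on_demand_rate: float, discount: float,
                                 utilization: float) -> float:
    """Effective price per *used* hour under a capacity commitment.

    Every committed hour is billed at the discounted rate, used or not, so the
    effective cost per used hour rises as utilization falls.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return on_demand_rate * (1 - discount) / utilization


def breakeven_utilization(discount: float) -> float:
    """Utilization below which paying on demand is cheaper than committing."""
    return 1 - discount


# Hypothetical: $4.00/hour on demand, 30% commitment discount.
for util in (0.9, 0.6):
    rate = effective_cost_per_used_hour(4.0, 0.3, util)
    print(f"utilization {util:.0%}: ${rate:.2f} per used hour (on demand: $4.00)")
print(f"break-even utilization: {breakeven_utilization(0.3):.0%}")
```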

The deployment method also raises important considerations. The cost difference between the most expensive major vendors and fully optimized platforms can range from 30 to 200 times, a disparity that enterprises in particular need to weigh carefully. Techniques such as input prompt optimization, which simplifies inputs, can cut costs by 15 to 25%. Furthermore, optimizing technology, platforms, configurations, scalability, and purchasing methods together has yielded greater savings than any single strategy alone. As automation advances, further opportunities are emerging to reduce the costs of fine-tuning, synthetic data generation, and RAG functionality.
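Why combined optimizations outperform any single one can be sketched with a simple compounding model: if each optimization removes a fraction of whatever cost remains, the total saving is 1 − ∏(1 − rᵢ). This assumes the savings are independent and multiplicative, which real optimizations may not be. The 20% figure sits within the prompt-optimization range quoted above; the other two rates are hypothetical.

```python
import math


def combined_savings(rates: list[float]) -> float:
    """Overall fractional saving when independent optimizations are stacked.

    Each rate applies to the cost remaining after the previous ones, so the
    total is 1 - product(1 - r): more than any single rate, less than their sum.
    """
    remaining = math.prod(1 - r for r in rates)
    return 1 - remaining


# 20% from prompt optimization, plus hypothetical 30% (platform/configuration)
# and 25% (purchasing method) savings.
total = combined_savings([0.20, 0.30, 0.25])
print(f"combined saving: {total:.0%}")
```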

Accurately Predicting and Controlling Costs in AI Service Operations

The various technical layers and components of AI-based products and services can significantly affect costs. With commercial AI models such as ChatGPT or Claude, costs are typically calculated from API usage, that is, the number of requests and the tokens they process. In contrast, when AI services are deployed on open-source models via cloud systems, costs must account for GPU usage, RAM, storage, and data processing at the unit prices provided. Engineering teams must evaluate costs and performance across the different engineering layers for each deployment option and develop efficient strategies for building and allocating resources.

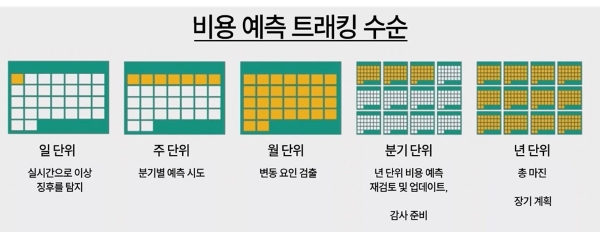

For more accurate AI cost predictions, it is advisable to create long-term plans based on the traffic capacity of the AI models used by business teams across the organization. Cost estimates are only projections and should be monitored regularly and adjusted against actual expenditure. Continuously tracking technological changes and AI vendor pricing policies also improves prediction accuracy.
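The monitor-and-adjust loop described above can be sketched as a growth-based forecast compared against actual monthly spend, flagging months that deviate by more than a tolerance. The constant monthly growth rate, the 10% tolerance, and all figures are hypothetical assumptions; a real forecast would be built from each team's traffic plans.

```python
def forecast(base_monthly_cost: float, monthly_growth: float, months: int) -> list[float]:
    """Project monthly cost assuming usage, and thus cost, grows at a constant rate."""
    return [base_monthly_cost * (1 + monthly_growth) ** m for m in range(months)]


def variance_report(forecasted: list[float], actual: list[float],
                    tolerance: float = 0.10) -> list[tuple[int, float]]:
    """Return (month, deviation) for months where actual spend misses the forecast
    by more than `tolerance` (as a fraction of the forecast)."""
    flagged = []
    for month, (f, a) in enumerate(zip(forecasted, actual), start=1):
        deviation = (a - f) / f
        if abs(deviation) > tolerance:
            flagged.append((month, round(deviation, 3)))
    return flagged


projected = forecast(10_000, 0.10, 4)        # hypothetical: 10% growth per month
actuals = [10_200, 11_500, 14_000, 13_000]   # hypothetical billed amounts
flagged = variance_report(projected, actuals)
print(flagged)  # month 3 overshoots the forecast by about 15.7%
```

Flagged months are the cue to revisit the growth assumption or investigate the spend behind the deviation.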

To enhance the efficiency of AI system operations and manage costs effectively, it's essential to establish guardrails. These guardrails help align the organization's vision with product goals and mitigate risks. Organizations can create an environment where costs are better predicted and controlled by setting guardrails considering budget limits, technical specifications, relationships with AI vendors, security and compliance, and operational metrics. The table below can serve as a reference for establishing guardrails to manage AI costs effectively:

<Table 2> Example of Scopes and Tasks for Cost Guardrails

| Scope | Tasks |
|---|---|
| Budget | Cap spending or the number of API operations for the POC; send real-time alerts when a set threshold is approached or exceeded; distribute reports containing predicted costs, actual costs, and quantities |
| Technology | Select the appropriate deployment model and control usage; implement pre-approval and strict authorization settings; scale AI workloads carefully (GPUs can be very expensive) |
| Vendor Relationship | Assess dependency on, and potential lock-in with, vendors; ensure sufficient trust in vendors' future strategies |
| Security and Compliance | Manage role-based permissions and data-sharing violations; conduct training for AI providers to protect the company's sensitive data |
| Testing and Operations | Monitor usage and costs against set thresholds; build pipelines to handle ethical issues such as bias and safety; track performance metrics such as accuracy, latency, and speed; strengthen cost management and optimization policies |
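The Budget row of the table above (a fixed POC spending cap plus alerts as thresholds are approached) can be sketched as a small guard object that every API call passes through. The `CostGuardrail` class, its thresholds, and the per-call cost are hypothetical; in a real system, alerts would go to a messaging or monitoring tool rather than a list.

```python
class CostGuardrail:
    """Tracks POC spend against a fixed cap and records alerts at set thresholds."""

    def __init__(self, cap: float, alert_at: tuple[float, ...] = (0.8, 1.0)):
        self.cap = cap
        self.alert_at = sorted(alert_at)
        self.spent = 0.0
        self.alerts: list[str] = []
        self._fired: set[float] = set()

    def record(self, cost: float) -> bool:
        """Record a call's cost; return False (block the call) if it would exceed the cap."""
        if self.spent + cost > self.cap:
            self.alerts.append(f"BLOCKED: call of ${cost:.2f} would exceed cap ${self.cap:.2f}")
            return False
        self.spent += cost
        for t in self.alert_at:
            if t not in self._fired and self.spent >= self.cap * t:
                self._fired.add(t)
                self.alerts.append(f"ALERT: spend reached {t:.0%} of ${self.cap:.2f} cap")
        return True


# Hypothetical POC: $100 cap, each call costs $9.
guard = CostGuardrail(cap=100.0)
results = [guard.record(9.0) for _ in range(12)]
for message in guard.alerts:
    print(message)
```

The ninth call crosses the 80% threshold and triggers an alert, and the twelfth is blocked because it would push spend past the cap.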

Ultimately, defining success for an AI service and determining the best way to achieve it requires treating cost as an objective metric and working to control it while pursuing that success. AI services are evaluated on processing speed, accuracy, and response quality, and balancing performance against cost requires effective management of cost per task, GPU costs, and other expenses. Teams should therefore set appropriate engineering goals for the various factors that affect AI services, such as improving speed and accuracy, reducing training costs, and lowering cost per token, and work together toward achieving them.
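As an illustration of tying cost to performance, the sketch below computes unit metrics for two hypothetical model configurations. "Cost per successful task" is an assumed metric for this example: total cost divided by the number of tasks completed correctly. It shows how a model that is cheaper per task can still be more expensive per useful result. All figures are invented.

```python
def unit_metrics(total_cost: float, tasks: int, success_rate: float,
                 tokens: int) -> dict[str, float]:
    """Per-unit metrics that tie spend to service performance."""
    successful = tasks * success_rate
    return {
        "cost_per_task": total_cost / tasks,
        "cost_per_successful_task": total_cost / successful,
        "cost_per_1k_tokens": total_cost / tokens * 1000,
    }


# Hypothetical: cheaper model A (50% success) vs pricier model B (90% success).
model_a = unit_metrics(3_000, 100_000, 0.5, 40_000_000)
model_b = unit_metrics(5_000, 100_000, 0.9, 40_000_000)
for name, m in (("A", model_a), ("B", model_b)):
    print(name, {k: round(v, 4) for k, v in m.items()})
```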

Conclusion

AI technology evolves continuously, with new updates arriving daily, expanding the potential of every industry. However, as the economy worsens, AI companies are being forced to demonstrate sustainability from the earliest stages of their business. In a still-maturing AI field, proving the viability of self-sustaining growth is essential for securing further investment. The difficulty is often tied to a common startup tendency to overestimate business outcomes or underestimate costs.

Proactively considering the strategies and costs needed to create and operate AI products in the early stages of the business is crucial for reducing risks and enhancing the chances of survival. Companies that fail to achieve tangible growth may be forced out of the market, yet investment in AI is expected to continue expanding. To build a robust business model that can withstand competitive market conditions and technological dependencies, it is essential to maintain technological agility while effectively controlling costs and responding proactively to changes.

References

[1] Samsung SDS Insight Report, "2023 Domestic AI Adoption and Utilization Survey," Dec 08, 2023.

[2] Deloitte, "TrustID Generative AI Analysis," Aug 2023.

[3] McKinsey, "Why AI-enabled customer service is key to scaling telco personalization," Oct 24, 2023.

[4] Businesswire, "86 Percent of Consumers Will Leave a Brand They Trusted After Only Two Poor Customer Experiences," Feb 02, 2022.

[5] HelloT, "AI Utilization in the Telecom Industry Expected to Increase Tenfold by 2032," Feb 12, 2024.

[6] McKinsey, "How AI is helping revolutionize telco service operations," Feb 25, 2022.

[7] The Economist, "Why fintech won't kill banks," Jul 17, 2015.

[8] Deloitte Insights, "The Evolving Banking Industry in the Age of AI," Aug 2023.

[9] Tech42, "'Internet Banks' on the Rise? No.1 KakaoBank and No.2 Toss Rank as Top Banking Apps," Nov 07, 2022.

[10] The Economist, "Hoping for a Fintech as Profitable as Samsung Electronics Overseas," Oct 04, 2023.

[11] ZDNet Korea, "Shinhan Bank Creates Small Business Ecosystem with Delivery App 'DDangyo'," Oct 19, 2023.

[12] Voicebot, "Bank of America's AI Assistant Erica Passes 1.5 Billion Interactions," Oct 19, 2023.

[13] Korea Economic Daily, "Is Fintech Only for Gen-Z? Senior Users Also Represent Key Customers," Jun 14, 2022.

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator's permission is prohibited.

Senior Program Manager at SAP France

Majored in computer science in Korea and worked as a developer at LG and Fujitsu Korea for 7 years. Moved to Paris, France, in 1998 and worked as a development manager and program manager at Business Objects; currently works as a product/program manager in the Engineering UX Division at SAP.
