Multi-node GPU Cluster

Multiple GPUs for Large-scale, High-performance AI computing

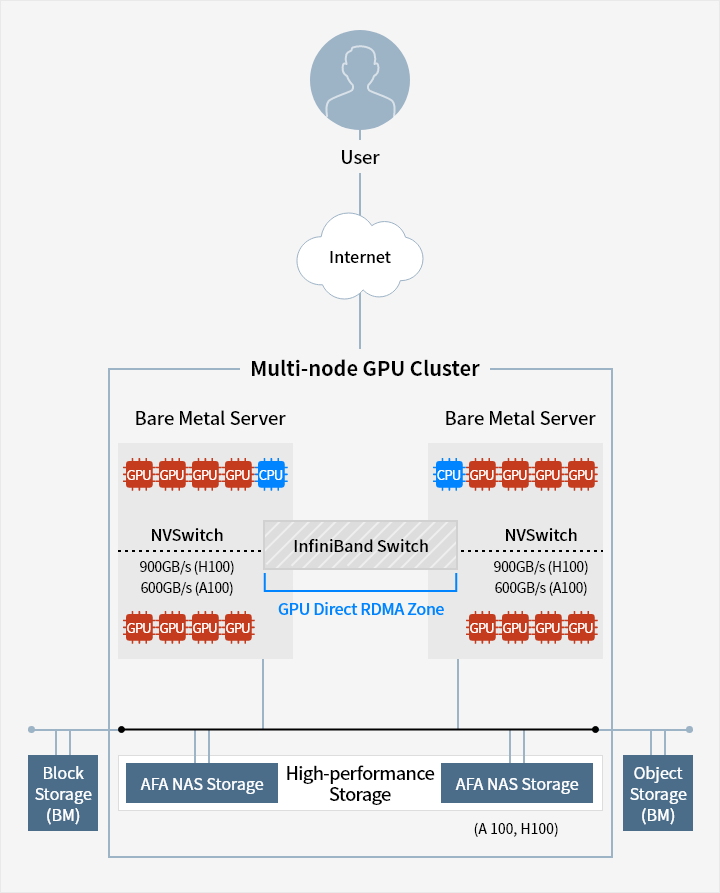

Bare Metal Server with GPU supports clustering of multiple GPUs. The GPU servers integrate with the high-performance storage and networking products on Samsung Cloud Platform, so users can access them with ease.

Overview

- Easy-to-use GPU Architecture

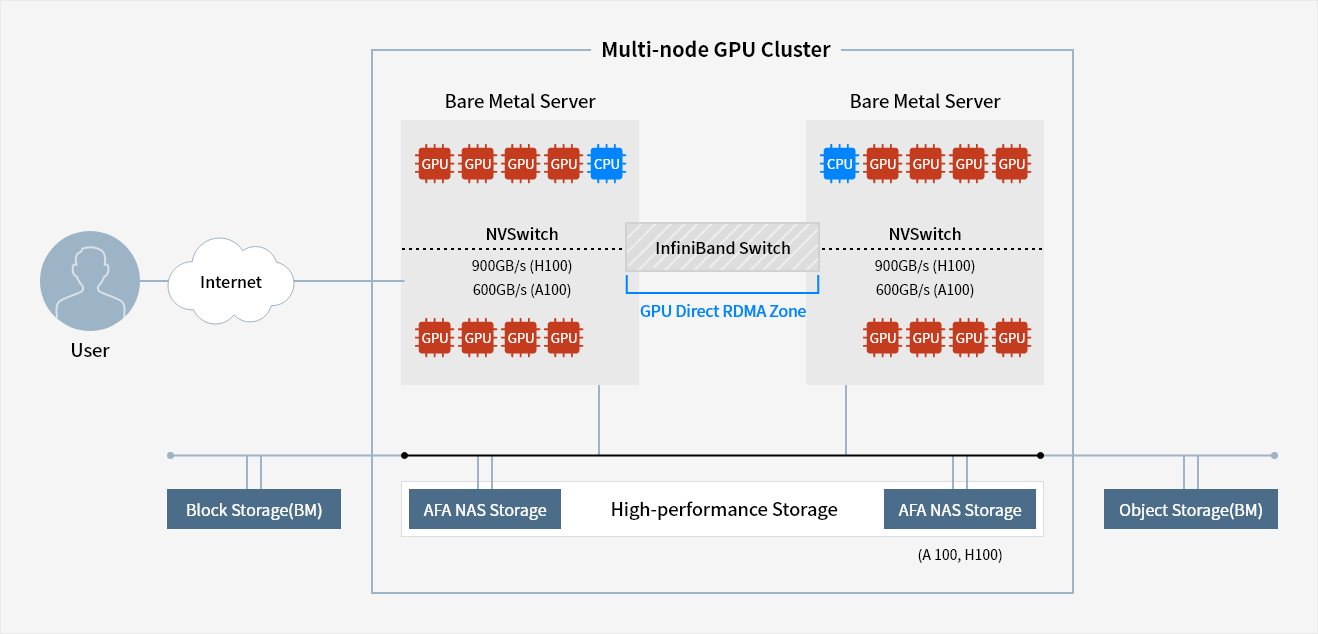

Multi-node GPU Cluster uses Bare Metal Servers built on the high-performance NVIDIA SuperPOD architecture. Its GPUs handle multiple user jobs or high-performance distributed workloads for large-scale AI model training.

- Integration with High-performance Network

Through integration with the network resources of Samsung Cloud Platform, Multi-node GPU Cluster can handle high-performance AI jobs. With GPUDirect RDMA (Remote Direct Memory Access) configured over InfiniBand switches, data I/O is processed directly between GPU memories, enabling high-speed AI/machine learning computation.

- Integration with High-performance Storage

Multi-node GPU Cluster supports integration with various storage resources on Samsung Cloud Platform. High-performance SSD NAS File Storage is available, connected directly over the high-speed network, and integration with Block Storage and Object Storage is also possible.
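As a rough illustration of how a distributed job might address the GPUs in such a cluster, the sketch below maps each GPU to a global rank. It assumes 8 GPUs per server, which is the standard configuration listed under Key Features. The function names and the node-major numbering are illustrative assumptions that follow a common convention in distributed training launchers; they are not a Samsung Cloud Platform API.

```python
GPUS_PER_NODE = 8  # standard GPU Bare Metal Server configuration


def global_rank(node_index: int, local_gpu: int,
                gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Return the cluster-wide rank of a GPU (hypothetical node-major numbering)."""
    if node_index < 0:
        raise ValueError("node_index must be non-negative")
    if not 0 <= local_gpu < gpus_per_node:
        raise ValueError("local_gpu must be in [0, gpus_per_node)")
    return node_index * gpus_per_node + local_gpu


def locate(rank: int, gpus_per_node: int = GPUS_PER_NODE) -> tuple:
    """Inverse mapping: return (node_index, local_gpu) for a global rank."""
    if rank < 0:
        raise ValueError("rank must be non-negative")
    return divmod(rank, gpus_per_node)


def same_node(rank_a: int, rank_b: int,
              gpus_per_node: int = GPUS_PER_NODE) -> bool:
    """True when both ranks sit in the same server.

    Under this layout, same-node traffic would typically stay on
    NVLink/NVSwitch, while cross-node traffic would go over InfiniBand.
    """
    return locate(rank_a, gpus_per_node)[0] == locate(rank_b, gpus_per_node)[0]
```

With this numbering, GPU 3 on the third server (node index 2) has global rank 19, and ranks 7 and 8 fall on different servers.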

Service Architecture

NVSwitch bandwidth shown in the architecture diagram: 900 GB/s (H100), 600 GB/s (A100)
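To put the NVSwitch figures in perspective, the following minimal sketch estimates a lower bound on the time needed to move a payload between GPUs inside one server. It treats the quoted bandwidth as a peak value and ignores latency and protocol overhead, so real transfers will take longer. The function name and the payload size are hypothetical.

```python
# Peak NVSwitch bandwidth per GPU model, in GB/s, as quoted in the architecture.
NVSWITCH_GB_PER_S = {"H100": 900.0, "A100": 600.0}


def ideal_transfer_seconds(payload_gb: float, gpu_model: str) -> float:
    """Lower-bound transfer time: payload size divided by peak bandwidth."""
    if payload_gb < 0:
        raise ValueError("payload_gb must be non-negative")
    if gpu_model not in NVSWITCH_GB_PER_S:
        raise KeyError(f"unknown GPU model: {gpu_model}")
    return payload_gb / NVSWITCH_GB_PER_S[gpu_model]
```

For example, an 18 GB payload would need at least 0.02 s on H100 and 0.03 s on A100 under these assumptions.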

Key Features

- Create/manage GPU Bare Metal Server

- Standard GPU Bare Metal Server with 8 NVIDIA GPUs

※ Internal NVMe disk, NVIDIA NVSwitch, and NVIDIA NVLink

- Provide a standard OS image with the RDMA SW stack (OS: Ubuntu)

- High performance processing

- Configure a GPUDirect RDMA environment using InfiniBand switches

- Provide high-performance SSD File Storage (A100, H100)

- Storage and network integration

- Provide additional storage (Block, Object) and network connections in addition to the OS disk

- Configure integration settings for subnet/IP and VPC Firewall

- Billing

- Usage-based: Billed hourly for the time resources are allocated after they are requested

- Commitment-based: Discount rates vary by contract term (1 year or 3 years). Monthly rates depend on resource type

※ A penalty is charged for early termination within the contract period
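The two billing models can be compared with simple arithmetic, as in the sketch below. All rates and the discount are hypothetical placeholders, since actual prices depend on the resource type and contract term. The early-termination penalty is not modeled because its amount is not stated here.

```python
def usage_based_cost(hours_allocated: float, hourly_rate: float) -> float:
    """Usage-based: hours the resources were allocated times the hourly rate."""
    if hours_allocated < 0 or hourly_rate < 0:
        raise ValueError("hours and rate must be non-negative")
    return hours_allocated * hourly_rate


def commitment_monthly_cost(monthly_rate: float, discount: float) -> float:
    """Commitment-based: monthly rate after the contract-term discount."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return monthly_rate * (1 - discount)


def break_even_hours(monthly_rate: float, discount: float,
                     hourly_rate: float) -> float:
    """Monthly hours above which the commitment plan costs less than hourly use."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return commitment_monthly_cost(monthly_rate, discount) / hourly_rate


# Hypothetical numbers for illustration only (not actual prices).
HOURLY_RATE = 10.0      # currency units per hour
MONTHLY_RATE = 8000.0   # undiscounted monthly rate
DISCOUNT = 0.25         # assumed discount for a given contract term
```

With these placeholder values, the discounted monthly cost is 6000.0 and the commitment plan becomes cheaper once monthly usage exceeds 600 hours.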
