CloudML Pipeline

Manages the Training and Execution History of AI Analytics Models in a Pipeline

Overview


Easy Lifecycle Analysis

CloudML Pipeline provides a pipeline in which Jupyter Notebooks and Workflow Modeler workflows can be registered as steps. The pipeline can be configured to match the design intended by the AI model developer (analyst), enabling an appropriate analysis job for each stage of the AI model lifecycle.


Efficient Training Execution Process

Users can define resource settings and machine learning execution arguments for each step. Setting execution options that fit each AI model's characteristics allows many different types of training to be run. Additionally, analyzing the pipeline's structure gives users a clearer understanding of each data transformation step and helps them better predict the outcome of a training run.


Real-time Monitoring

Logs can be monitored in real time during AI model training, giving users an at-a-glance view of each step's experiment metrics and letting them track training history.

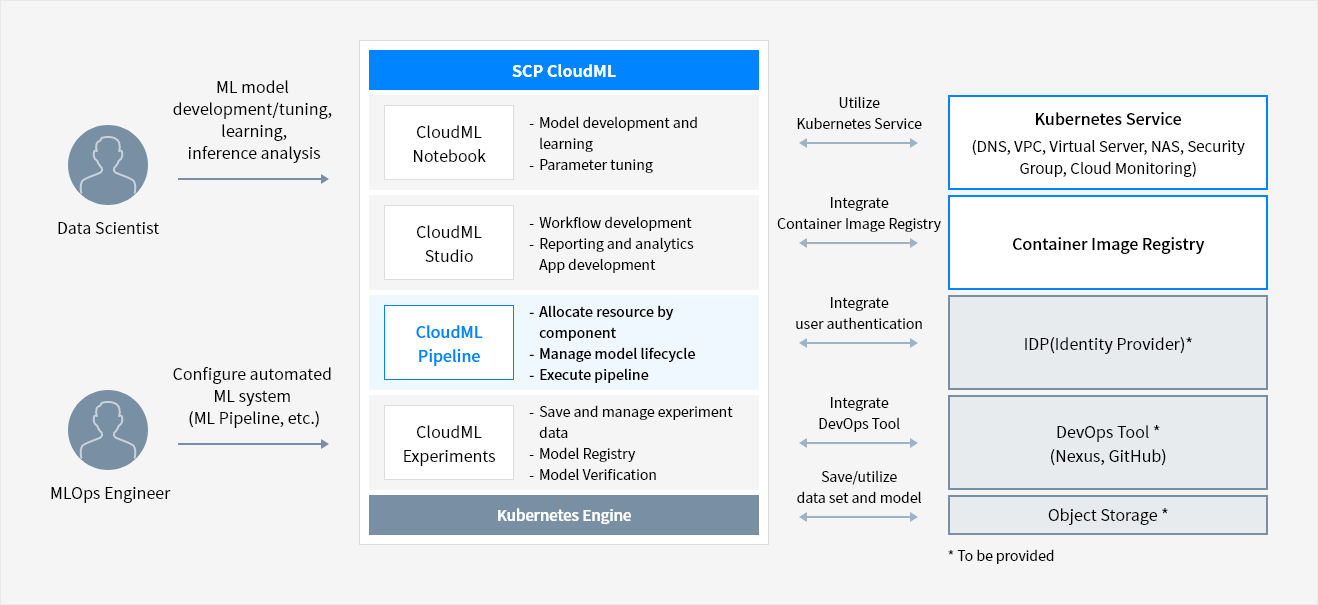

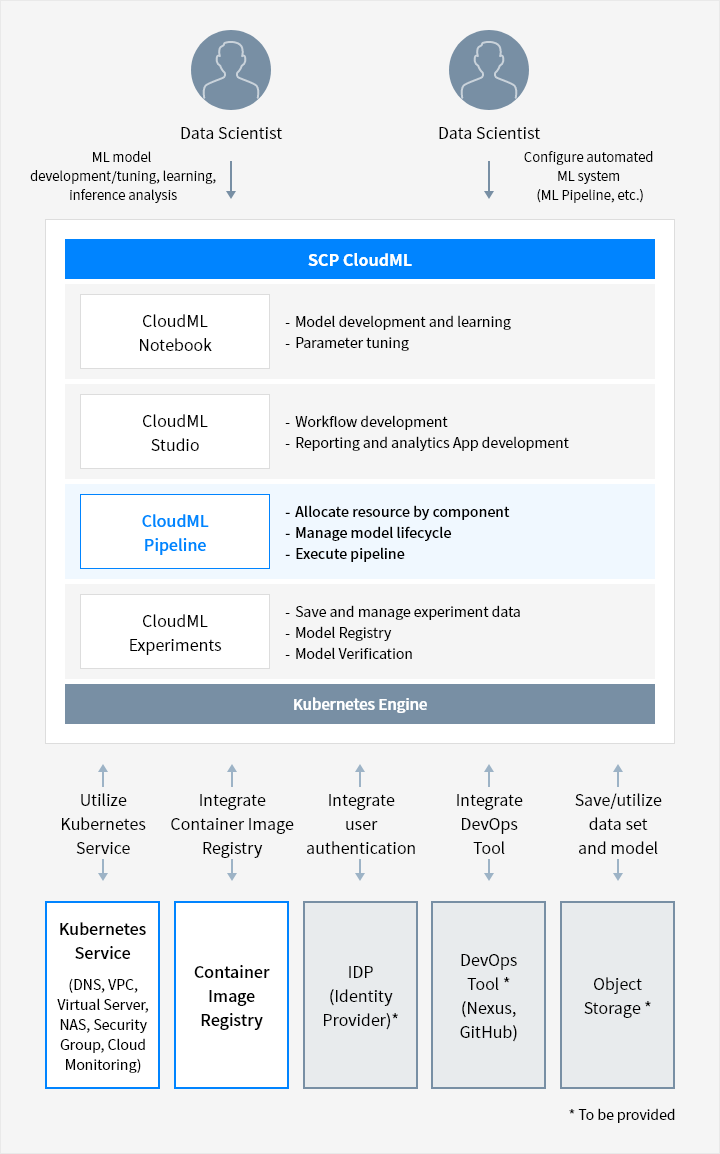

Service Architecture

- SCP CloudML

  - CloudML Notebook: Model development and training, parameter tuning

  - CloudML Studio: Workflow development, report and analytics app development

  - CloudML Pipeline: Per-component resource allocation, model lifecycle management, pipeline execution

  - CloudML Experiments: Experiment data storage and management, Model Registry, model verification

- Kubernetes Engine

- Users (Data Scientist, MLOps Engineer) → ML model development/tuning, training, inference analysis → SCP CloudML

- SCP CloudML integrations:

  - Utilize Kubernetes Service → Kubernetes Service (DNS, VPC, Virtual Server, NAS, Security Group, Cloud Monitoring)

  - Integrate Container Image Registry → Container Image Registry

  - Integrate user authentication → IDP (Identity Provider)*

  - Integrate DevOps Tool → DevOps Tool* (Nexus, GitHub)

  - Save/utilize data set and model → Object Storage*

Key Features


Pipeline management and tracking

- Execution history monitoring: Check the status and execution history of each step

- Real-time execution log monitoring: Monitor accumulated log files in real time while a step runs

- Real-time experiment metric monitoring: Visualize and monitor experiment metrics (accuracy, loss, etc.) in real time

※ Real-time metric monitoring is enabled when you apply for the CloudML Experiments service.

Integrated management of UI-based workflow models

- Python model integration

- Define each execution step in the pipeline: creation, analysis/step, and options/arguments

- Allocate resources per step, with an option to select the execution image
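To make the per-step settings above more concrete, here is a minimal sketch of how a pipeline step might be described (source, execution image, resources, arguments) and how steps could be ordered before execution. Everything here is hypothetical: `StepSpec`, `execution_order`, the field names, and the image names are illustrative assumptions, not the CloudML Pipeline API, and the sketch assumes steps form a dependency graph, as pipelines typically do.

```python
from dataclasses import dataclass, field


@dataclass
class StepSpec:
    """Hypothetical description of one pipeline step (NOT the CloudML Pipeline API)."""
    name: str
    source: str                 # e.g. a notebook path or workflow name (illustrative)
    image: str                  # execution image selected for this step (illustrative)
    cpu: float                  # vCores requested for the step's pod
    memory_gi: float            # memory requested, in GiB
    args: dict = field(default_factory=dict)        # step options/arguments
    depends_on: list = field(default_factory=list)  # names of upstream steps


def execution_order(steps):
    """Return step names in an order that respects depends_on (Kahn's algorithm)."""
    names = {s.name for s in steps}
    for s in steps:
        missing = set(s.depends_on) - names
        if missing:
            raise ValueError(f"step {s.name!r} depends on unknown steps: {sorted(missing)}")

    # Count unmet dependencies per step; steps with none are ready to run.
    indegree = {s.name: len(s.depends_on) for s in steps}
    ready = [name for name, d in indegree.items() if d == 0]
    order = []
    while ready:
        name = ready.pop(0)
        order.append(name)
        # Finishing this step may unblock its downstream steps.
        for s in steps:
            if name in s.depends_on:
                indegree[s.name] -= 1
                if indegree[s.name] == 0:
                    ready.append(s.name)
    if len(order) != len(steps):
        raise ValueError("pipeline has a dependency cycle")
    return order


steps = [
    StepSpec("preprocess", "preprocess.ipynb", "python-base", cpu=1, memory_gi=2),
    StepSpec("train", "train.ipynb", "pytorch-gpu", cpu=4, memory_gi=16,
             args={"epochs": 10}, depends_on=["preprocess"]),
    StepSpec("evaluate", "evaluate-workflow", "python-base", cpu=1, memory_gi=2,
             depends_on=["train"]),
]
print(execution_order(steps))  # ['preprocess', 'train', 'evaluate']
```

Keeping resources and the image on each step, rather than on the pipeline as a whole, mirrors the idea of allocating resources per step: a heavy training step can request more vCores than a light preprocessing step.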

Pricing


- Billing

- Usage time of the Kubernetes Engine container pods occupied by CloudML Pipeline (vCore/hr)
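As a rough illustration of a vCore-per-hour billing unit, the sketch below sums vCore-hours over the pods a pipeline run occupies. It assumes usage is simply vCores multiplied by running time; the actual rate, rounding rules, and minimum billing increments are not stated here, so `rate_per_vcore_hour` and the pod figures are hypothetical inputs.

```python
def vcore_hours(pods):
    """Sum vCore-hours over (vcores, seconds_running) pairs (assumed billing model)."""
    return sum(vcores * seconds / 3600 for vcores, seconds in pods)


def estimate_charge(pods, rate_per_vcore_hour):
    """Estimated charge = vCore-hours x a hypothetical per-vCore-hour rate."""
    return vcore_hours(pods) * rate_per_vcore_hour


# Hypothetical run: a 1-vCore pod for 30 min, a 4-vCore pod for 2 h, a 1-vCore pod for 15 min.
pods = [(1, 1800), (4, 7200), (1, 900)]
print(vcore_hours(pods))  # 8.75
```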
