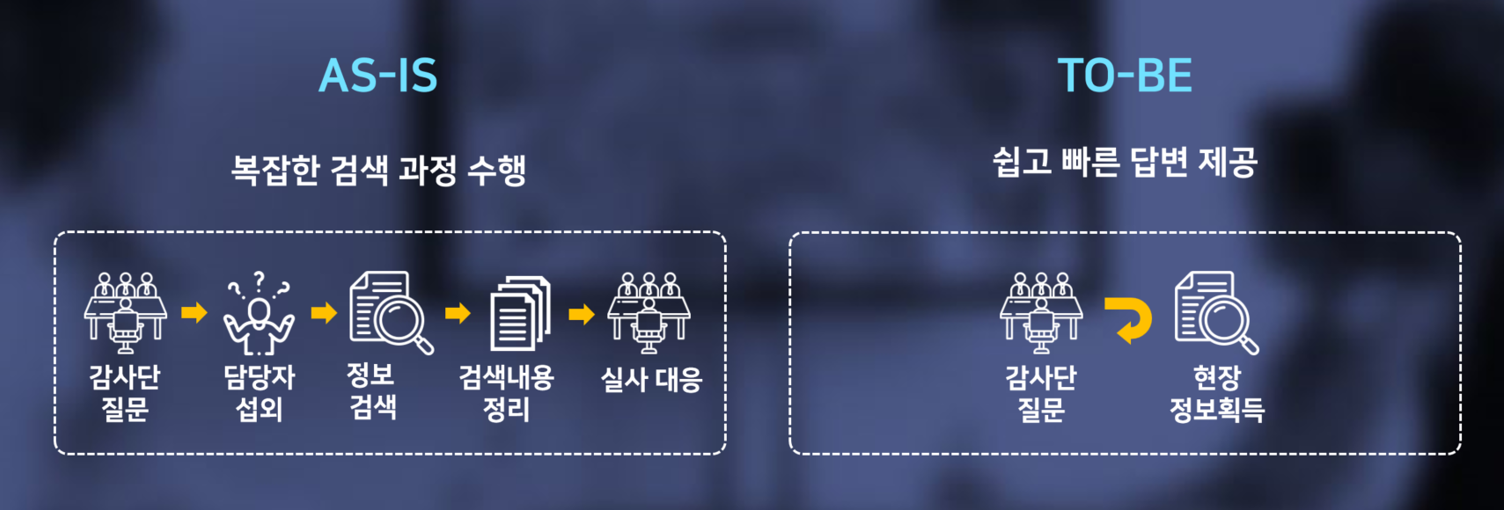

- Auditor's question

- Contact the person in charge

- Search for information

- Organize the search results

- Respond to the inspection

Case Study: Samsung Biologics Automates Generative AI-Based Works

- This article is based on the session titled "Generative AI-Based Workflow Automation" by Seongil Cho, Vice President and Team Leader of Samsung Biologics, presented during the "Brity Automation Summit 2024," a conference hosted by Samsung SDS for enterprise customers in April 2024. -

Since the launch of the GPT-3.5 model in November 2022, various Large Language Models (LLMs) have been released, including Gemini 1.0 Pro, GPT-4 Turbo, Claude 2.1, and Gemini 1.5 Pro from global big tech companies, as well as the domestic model Solar by Upstage. When GPT-3.5 was first introduced, there was skepticism about applying it immediately to corporate workflows because of issues like hallucinations. However, as we began using GPT-4, this perspective started to change. Generative AI now evolves so rapidly, showing significant growth within just three months, that the changes and innovations it will bring are almost daunting. In a world driven by IT, Samsung Biologics, a biopharmaceutical company, recognized the urgency of embracing IT and generative AI-based innovations to avoid falling behind. Even so, deciding to adopt generative AI was not easy.

Looking back on decades of IT experience, from the SNS revolution of 2004 (Facebook) through cloud computing (AWS), smartphones (the app ecosystem), autonomous driving, remote work, and now generative AI, it became clear that experiencing and adapting to these evolving technologies quickly is crucial. This technological evolution is closely linked with AI. Building on the smartphone app ecosystem, the idea of an app store for GPT emerged, leading OpenAI to launch the "GPT Store (GPTs)." Major tech companies like Microsoft, Google, and Amazon are rapidly integrating AI into their platforms, leveraging their vast data, platforms, and cloud infrastructures. As a result, generative AI is spreading at an astonishing pace. Approaching new technologies with humility, it is essential not only to recognize the significance of AI but also to experience and adapt to generative AI technologies quickly.

However, driving innovation is no easy task for non-IT companies with limited resources. First, the key lies in fostering an AI culture within the organization, encouraging all employees to engage with the technology and participate in the ongoing transformation. Second, innovation should be pursued through technical collaboration with IT specialists. Companies need to understand data preparation and generative AI from a domain perspective, while IT specialists like Samsung SDS should lead in preparing the platforms for applying generative AI, including fine-tuning, RAG*, and vectorization, and in implementing the actual generative AI technologies.

* RAG: Retrieval Augmented Generation

Samsung Biologics is…

Established in 2011, Samsung Biologics (SBL) has seen rapid growth, with an average annual increase of 20-30%, making it the fourth-largest company by market capitalization in South Korea.

The company partners with over 110 customers, including major pharmaceutical companies like Pfizer and Bristol-Myers Squibb (BMS), and has the world's largest production capacity at 600,000 liters. Samsung Biologics has also received more than 265 approvals from global regulatory agencies such as the FDA* and EMA*. It provides end-to-end services for biopharmaceuticals, from early development to clinical and commercial production. Biopharmaceuticals are not simple chemicals. Producing them involves complex processes: cultivating cells, purifying the product, aseptically filling it into vials, ensuring safety through quality analysis, and obtaining final approvals from regulatory agencies. These processes are crucial in the biopharmaceutical industry.

* FDA : Food and Drug Administration

* EMA : European Medicines Agency

Development and production process of biopharmaceuticals (Source: Samsung Biologics)

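- Drug substance production: cell culture, purification

- Drug product production: aseptic filling

- Analysis: quality analysis, stability studies

- Regulatory approval: clinical and commercial, approval support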

Pain Points

In the biopharmaceutical industry, numerous SOPs* must be followed strictly, in both content and procedure. In IT or general manufacturing, the main focus is often the quality of the final product. In biopharmaceuticals, a product cannot be used if procedures were violated, even when it meets the quality standards, and every procedural violation, including those that affect product quality, must be handled meticulously. For this reason, global regulatory agencies such as the FDA and EMA, along with major customers, conduct regular inspections and audits to ensure that pharmaceutical manufacturers comply with SOPs and keep their products safe. If issues are found, a regulatory agency may publicly issue a warning letter, which can halt production or delay a product launch. Because Samsung Biologics, which operates under the motto "Driven. For Life.", makes products that affect people's lives, any damage to customer trust can severely harm long-term business relationships.

In their daily work, and especially during inspections by regulatory agencies or customers, employees at Samsung Biologics often spent significant amounts of time searching through internal documents, such as PDFs or extensive databases, to find and verify information related to SOPs*, deviations*, CAPA*, or CC*. The goal of Inspection & Audit Support using Generative AI was to provide rapid, accurate responses and raise employee productivity so that customer demands could be addressed quickly.

* SOP (Standard Operating Procedure): A document that outlines the standard operating procedures for pharmaceutical processes.

* Deviation: Variations that the SOP does not permit.

* CAPA (Corrective and Preventive Actions): Actions taken to correct an identified problem and to prevent it from recurring.

* CC (Change Control): Management of changes.

Pain points from response to inspection of regulators and customers (Source: Samsung Biologics)

- Auditor's question

- On-site information gathering

Generative AI PoC using Brity Automation

Samsung Biologics undertook a PoC project in collaboration with Samsung SDS by using generative AI to enhance on-site response capabilities to queries from regulatory agencies and customer audit teams.

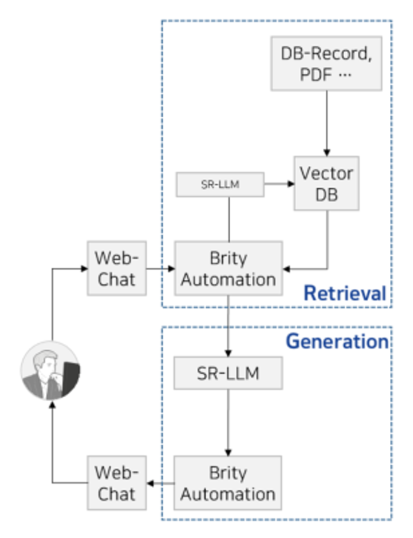

The PoC used Samsung SDS's business automation solution, Brity Automation*, to design task-specific processes. Its functionality was implemented with Brity Web-Chat* as the user communication channel, Samsung's generative AI model (SR-LLM), and RAG developed for the project. This setup allows the system to respond to general inquiries much as ChatGPT does, while also providing domain-specific translation by referencing a predefined glossary through Brity Automation.

To briefly explain the core process of the Generative AI PoC project, when a user submits a query through Web-Chat, it is sent to Brity Automation, and Brity Automation searches for answers in a vector DB that has embedded and indexed data from Samsung Biologics' databases and PDFs. The search results, along with their sources, are added to the prompt, and the LLM generates the final answer, which is then delivered back to the user via Brity Web-Chat through Brity Automation.

* Brity Automation: A business automation solution specialized in implementing hyper-automation for enterprise operations. It enables UI-based automation design and API-based workflow execution and supports knowledge search and chat services using LLM.

* Brity Web-Chat: Acts as a channel for users to interact with generative AI. This is an asset acquired by Samsung SDS through the delivery of multiple chatbots and used in building chatbots for Samsung Group and internal solutions/systems (received the Grand Prize at the Web Award Korea in December 2022).

The core process for PoC (Source: Samsung SDS)

The key generative AI technologies include Retrieval Augmented Generation (RAG) and Text-to-SQL. We will provide a detailed explanation of the two tasks where these technologies were applied.

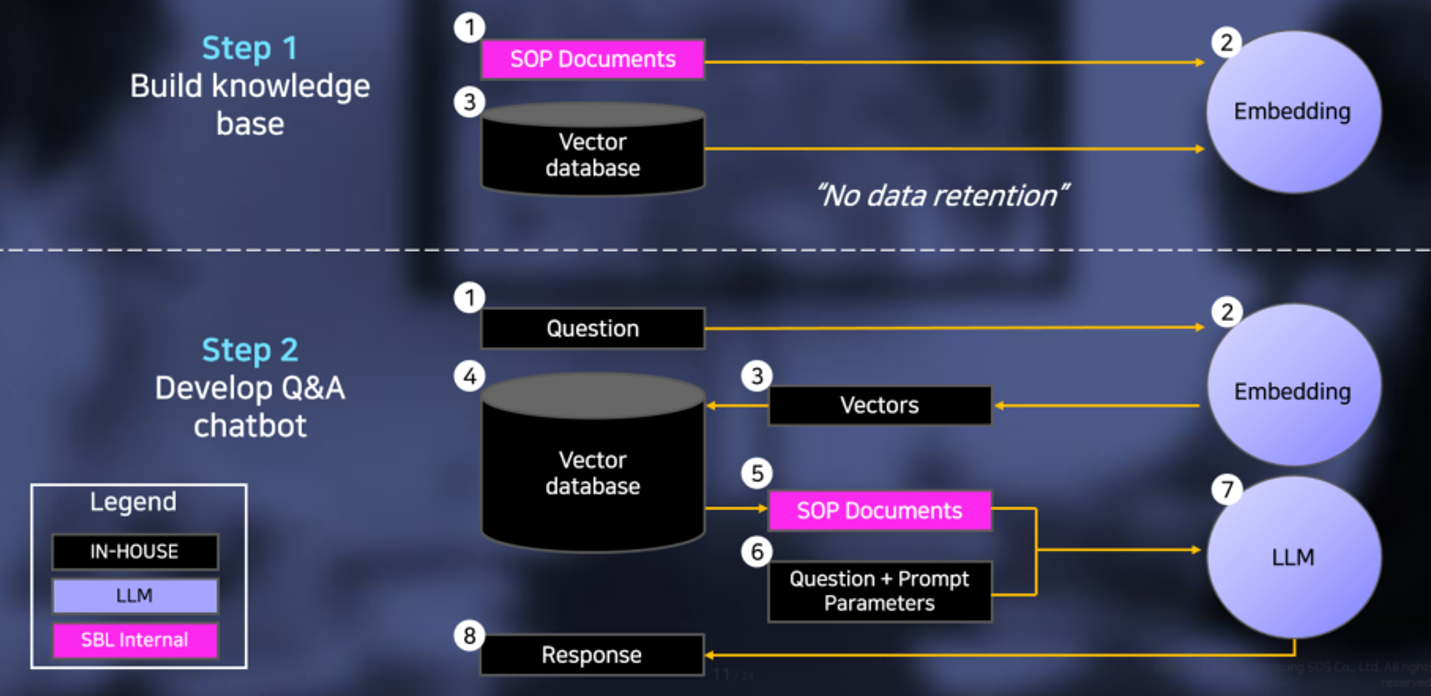

1) GenAI SOP Q&A (RAG applied, Q&A for SOPs including complex tables)

Since LLMs have a data cutoff point in their training, they cannot reflect the most recent data. However, when a user asks a question, the system can find sentences from documents that contain the answer, add those sentences to the prompt, and then query the LLM. The LLM will then generate a response based on the reference sentences. This technique is known as RAG.
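The retrieve-then-prompt idea described above can be sketched in a few lines. This is a minimal illustration, not the PoC's implementation: the bag-of-words `embed` function stands in for a real embedding model, the passages and document names are invented examples, and the prompt wording is our own assumption.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: bag-of-words counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, passages, k=2):
    """Return the k passages most similar to the question."""
    q = embed(question)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p["text"])), reverse=True)
    return ranked[:k]

def build_prompt(question, hits):
    """Place the retrieved passages, with their sources, in front of the question."""
    context = "\n".join(f"[{h['doc']} p.{h['page']}] {h['text']}" for h in hits)
    return (
        "Answer using only the reference passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"References:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical passages for illustration only.
passages = [
    {"doc": "SOP-001", "page": 3, "text": "Gowning is required before entering the filling suite."},
    {"doc": "SOP-002", "page": 7, "text": "Deviation reports must be filed within one business day."},
    {"doc": "SOP-003", "page": 1, "text": "Buffer tanks are cleaned after every batch."},
]
question = "When must a deviation report be filed?"
hits = retrieve(question, passages, k=1)
prompt = build_prompt(question, hits)
print(prompt)  # this string is what would be sent to the LLM
```

Keeping the document name and page number inside the prompt is what later lets the answer cite its source.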

Samsung Biologics developed RAG to find the answers from SOP documents, including complex tables and images. Since internal company data is not pre-trained in LLMs, the first step was to embed the PDF-formatted SOP documents into a Vector DB, a process known as 'Build knowledge base.' The preprocessing step of loading document data and dividing it into smaller units requires a lot of experience. Issues can arise when tables span across multiple pages, contain nested tables, or have merged cells, making it challenging to manage objects within the document. When a table is included within the text, it often acts as noise, so the first step was to separate the text and tables in the SOP documents. The text was divided into passages based on a predefined chunk size, while the separated tables underwent additional preprocessing before being organized into passages. Each passage was then annotated with metadata (document name, passage number, page number, etc.) and stored in both a relational DB and a vector DB.
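As a rough sketch of the passage-building step described above, the code below chunks text by a fixed size, keeps each table as a single passage, and attaches metadata. It assumes the hard part, separating text from tables during PDF extraction, has already been done. The `Passage` fields, the word-based chunk size, and the sample pages are illustrative assumptions, not the PoC's actual schema.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    doc_name: str
    passage_no: int
    page_no: int
    kind: str      # "text" or "table"
    content: str

def chunk_words(text, chunk_size):
    """Split text into consecutive chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]

def build_passages(doc_name, pages, chunk_size=50):
    """pages: list of (page_no, blocks); each block is ("text", str) or ("table", str)."""
    passages = []
    for page_no, blocks in pages:
        for kind, content in blocks:
            # Text is chunked; a table stays whole so its rows are not split apart.
            pieces = chunk_words(content, chunk_size) if kind == "text" else [content]
            for piece in pieces:
                passages.append(Passage(doc_name, len(passages) + 1, page_no, kind, piece))
    return passages

# Hypothetical extracted document for illustration only.
pages = [
    (1, [("text", "one two three four five six seven"), ("table", "step | limit\nmix | 30 min")]),
    (2, [("text", "eight nine ten")]),
]
passages = build_passages("SOP-EXAMPLE", pages, chunk_size=3)
for p in passages:
    print(p.passage_no, p.page_no, p.kind, repr(p.content))
```

Each `Passage` could then be written to the relational DB as a row and to the vector DB as an embedding keyed by the same passage number.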

GenAI SOP Q&A—Data Flow (Source: Samsung Biologics)

Step 1 Build knowledge base: SOP documents > Embedding (labeled "No data retention") > Vector database

Step 2 Develop Q&A chatbot: Question > Embedding > Vectors > Vector database > SOP documents; Question + prompt parameters > LLM > Response

Legend: In-house, LLM, SBL internal

Next, we implemented the second step, "Develop Q&A chatbot," in which the system retrieves relevant passages from the vector DB based on the user's query and then uses the LLM to generate the final answer.

When a user asks a question through the chatbot, the system first translates the question into English, since the Samsung LLM (SR-LLM) produces better responses in English than in Korean. It then searches the Samsung Biologics glossary to expand any abbreviations into full terms, refining the question into a form that the LLM can best respond to. The modified question is converted into a vector, and a similarity search against the passages stored in the vector DB selects the top 20 candidates. This search applies two models, MP-Net* and DPR*, to find passages based on similarity and then takes the intersection of their results. The technique maintains performance while reducing the number of LLM calls by 50%, and Samsung SDS filed a patent for it in January 2024.
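To make the glossary expansion and the two-retriever intersection concrete, here is a small sketch. The two scoring functions (word overlap and character-trigram overlap) are simple stand-ins for MP-Net and DPR, chosen only so the example runs without external models. The passages are invented, and the way the intersection is ordered is our assumption rather than the patented method.

```python
import re

# Expansions taken from the article's own definitions.
GLOSSARY = {"CC": "Change Control", "CAPA": "Corrective and Preventive Actions"}

def expand_abbreviations(question, glossary):
    """Replace all-caps abbreviations found in the glossary with their full terms."""
    return re.sub(r"\b[A-Z]{2,}\b", lambda m: glossary.get(m.group(0), m.group(0)), question)

def word_overlap(query, text):
    """Stand-in scorer 1: Jaccard overlap of words."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    t = set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(q & t) / len(q | t) if q | t else 0.0

def trigram_overlap(query, text):
    """Stand-in scorer 2: Jaccard overlap of character trigrams."""
    def grams(s):
        s = s.lower()
        return {s[i:i + 3] for i in range(len(s) - 2)}
    q, t = grams(query), grams(text)
    return len(q & t) / len(q | t) if q | t else 0.0

def top_k(query, passages, scorer, k):
    """Indices of the k highest-scoring passages under one scorer."""
    ranked = sorted(range(len(passages)), key=lambda i: scorer(query, passages[i]), reverse=True)
    return ranked[:k]

def intersect_candidates(query, passages, k):
    """Keep only passages that both retrievers place in their top k."""
    first = top_k(query, passages, word_overlap, k)
    second = set(top_k(query, passages, trigram_overlap, k))
    return [i for i in first if i in second]

# Hypothetical passages for illustration only.
passages = [
    "Change Control requests require quality approval.",
    "Cell culture runs in a bioreactor.",
    "Purification uses chromatography columns.",
]
query = expand_abbreviations("Who approves CC requests?", GLOSSARY)
candidates = intersect_candidates(query, passages, k=2)
print(query)
print(candidates)
```

Because a passage must satisfy both retrievers, the candidate list is never longer than either top-k list, which is one way fewer passages end up being sent to the LLM.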

Afterward, the candidate passages are used to construct the content sent to the LLM. A retrieved passage may be similar to the question, yet the actual answer is often found just before or after it. Therefore, to make the most of the 8K maximum token count that can be sent to the LLM in a single request, we add the six passages before and after each retrieved passage, a number arrived at through repeated testing. When a passage is a table, only the table itself is sent to the LLM. This method was also patented in January 2024. To help the LLM generate accurate responses, the prompt combines the LLM's role, objective, and constraints (for example, an instruction to respond using only the given passages) with the user query and the candidate passage content prepared earlier.
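The neighbor-expansion step can be sketched as follows. The window of six and the 8K limit come from the description above. Everything else is an assumption for illustration: tokens are approximated by whitespace-separated words, and when the budget runs out the sketch simply stops adding neighbors, starting from those nearest the hit.

```python
def count_tokens(text):
    """Rough token count by whitespace split; a real system would use the LLM's tokenizer."""
    return len(text.split())

def build_context(passages, hit, window=6, max_tokens=8000):
    """Return indices of the passages to send for one retrieved hit.

    passages: list of dicts with "kind" ("text" or "table") and "content",
    in document order. A table hit is sent on its own.
    """
    if passages[hit]["kind"] == "table":
        return [hit]
    chosen = [hit]
    used = count_tokens(passages[hit]["content"])
    # Add neighbors nearest-first so the closest context survives a tight budget.
    for offset in range(1, window + 1):
        for idx in (hit - offset, hit + offset):
            if 0 <= idx < len(passages):
                cost = count_tokens(passages[idx]["content"])
                if used + cost > max_tokens:
                    return sorted(chosen)
                chosen.append(idx)
                used += cost
    return sorted(chosen)

# Hypothetical document: 20 ten-word text passages, with one table at index 5.
passages = [{"kind": "text", "content": " ".join(["word"] * 10)} for _ in range(20)]
passages[5] = {"kind": "table", "content": "step | limit"}

print(build_context(passages, 10))                 # hit in the middle of the document
print(build_context(passages, 1))                  # hit near the start
print(build_context(passages, 5))                  # table hit
print(build_context(passages, 10, max_tokens=35))  # tight token budget
```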

Since there is no single correct way to create these prompts, optimizing them requires extensive experience and iterative refinement. Each of the 20 candidate passages is sent to the LLM in turn as a prompt. Candidates that yield no response are excluded, and the process repeats until the desired number of responses is obtained. The final responses are then provided along with the corresponding document names and page numbers as supporting evidence.
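The candidate-by-candidate loop described above might look like the sketch below. The `ask_llm` callable is a hypothetical placeholder for the real LLM call and is assumed to return `None` when the passage does not support an answer. The candidate contents and the `wanted` parameter name are illustrative.

```python
def collect_answers(candidates, ask_llm, wanted=3):
    """Send candidates one at a time and stop once `wanted` answers are collected."""
    answers = []
    for cand in candidates:
        reply = ask_llm(cand["context"])
        if reply is None:
            continue  # no answer from this passage, so it is excluded
        # Keep the source so the answer can cite document name and page number.
        answers.append({"answer": reply, "doc": cand["doc"], "page": cand["page"]})
        if len(answers) == wanted:
            break
    return answers

# Hypothetical candidates and a fake LLM for illustration only.
candidates = [
    {"doc": "SOP-A", "page": 2, "context": "cleaning schedule"},
    {"doc": "SOP-B", "page": 5, "context": "deviation reporting deadline"},
    {"doc": "SOP-C", "page": 9, "context": "deviation classification"},
    {"doc": "SOP-D", "page": 1, "context": "deviation closure"},
]
calls = []

def fake_llm(context):
    calls.append(context)
    return f"Based on: {context}" if "deviation" in context else None

answers = collect_answers(candidates, fake_llm, wanted=2)
for a in answers:
    print(a["doc"], a["page"], a["answer"])
```

Stopping as soon as enough answers are collected means later candidates are never sent, which keeps the number of LLM calls down.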

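* MP-Net (Masked and Permuted Pre-Training for Language Understanding): A pre-trained language model that combines masked and permuted language modeling. Here it is used for similarity search, in the way FAQ systems traditionally found an answer by searching for a stored question similar to the user's query and returning the corresponding response.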

* DPR (Dense Passage Retriever): Indexes all passages in a low-dimensional continuous space, efficiently retrieving the top k passages related to a user's input question at runtime.

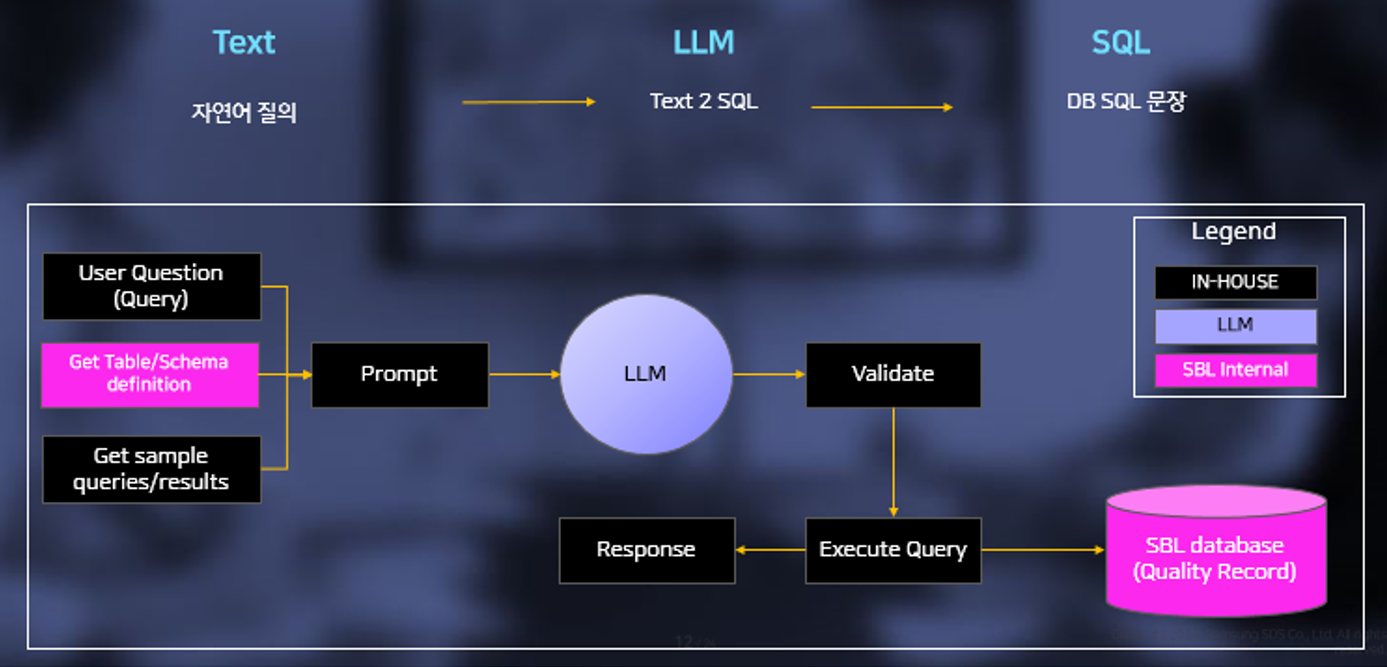

2) GenAI Quality Record Q&A (Text-to-SQL Application)

Samsung Biologics has a quality database that records corrective and preventive actions for defects labeled as deviations. The PoC covered three tables of this database and applied LLM-based Text-to-SQL* technology to user questions. The method is gaining interest because it lets people who are not SQL experts access and extract data from relational databases without additional training. Questions were converted to SQL, and the results retrieved from the database were presented as tables. Because the database records quality activities, questions about free-text columns (fields) such as "Detailed Description of the Issue" or "Comments from the Responsible Person" cannot be answered with SQL alone, so RAG technology was applied as well.

* Text-to-SQL (T2S): A technology that automatically converts natural language text input by users into Structured Query Language (SQL).

First, the database data is refined, and columns where users enter free text, such as descriptions, are embedded into a vector DB for RAG. When a user queries the database, the input is translated into English by the LLM, and a prompt is created to help the LLM understand the database structure and generate accurate SQL. The prompt includes the SQL CREATE TABLE statements, which tell the LLM the structure of the internal database tables, along with sample rows from the actual stored records. The LLM is also assigned the role of an SQL expert and instructed to generate SQL whenever the user's query allows it. Using Brity Automation's UI-based workflow, the validation of the generated SQL was developed quickly, and the validated SQL is then run against the internal quality database to collect data. If there are multiple results for the quality activities, they are formatted into a table for the response. If the SQL returns no results, or if the question concerns a free-text column, the same process as SOP Q&A (RAG) is followed: a vector similarity search is performed and the results are sent to the LLM to generate the final answer.
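The flow above (schema-aware prompt, SQL validation, execution, fallback to RAG) can be sketched with SQLite. This is an illustration under several assumptions. The `deviation` table and its rows are invented, `generate_sql` and `rag_fallback` are hypothetical callables standing in for the LLM and the RAG pipeline, and the validation rule (a single SELECT that SQLite can plan) is our own choice, not the PoC's. The routing of free-text questions directly to RAG is not shown.

```python
import sqlite3

# Hypothetical schema for illustration only.
SCHEMA = """CREATE TABLE deviation (
    id INTEGER PRIMARY KEY,
    area TEXT,
    status TEXT,
    description TEXT
)"""

def build_prompt(schema, sample_rows, question):
    """Give the LLM a role, the table definition, sample rows, and the question."""
    samples = "\n".join(str(r) for r in sample_rows)
    return (
        "You are an SQL expert. Using the table below, write one SQLite SELECT "
        "statement that answers the question. If you cannot, reply NONE.\n\n"
        f"{schema}\n\nSample rows:\n{samples}\n\nQuestion: {question}\nSQL:"
    )

def validate_sql(conn, sql):
    """Accept only a single SELECT statement that SQLite can plan against the schema."""
    stmt = sql.strip().rstrip(";")
    if not stmt.lower().startswith("select") or ";" in stmt:
        return False
    try:
        conn.execute("EXPLAIN " + stmt)  # plans the query without running it
        return True
    except sqlite3.Error:
        return False

def answer(conn, question, generate_sql, rag_fallback):
    """Try Text-to-SQL first; fall back to RAG if the SQL is invalid or returns nothing."""
    samples = conn.execute("SELECT * FROM deviation LIMIT 2").fetchall()
    sql = generate_sql(build_prompt(SCHEMA, samples, question))
    if validate_sql(conn, sql):
        rows = conn.execute(sql).fetchall()
        if rows:
            return {"route": "sql", "rows": rows}
    return {"route": "rag", "answer": rag_fallback(question)}

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO deviation (area, status, description) VALUES (?, ?, ?)",
    [("Filling", "open", "Label misprint on batch record"),
     ("Culture", "closed", "Temperature excursion in bioreactor")],
)

def fake_rag(question):
    return f"(RAG answer for: {question})"

result = answer(conn, "Which deviations are open?",
                lambda prompt: "SELECT id, area FROM deviation WHERE status = 'open';",
                fake_rag)
print(result)
```

Validating before executing matters because the SQL is generated text: the check above rejects anything that is not a lone SELECT, as well as queries that reference columns the table does not have.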

GenAI Quality Record Q&A—Data Flow (Source: Samsung Biologics)

Text: natural-language query > LLM: Text-to-SQL > SQL: database SQL statement

Legend: In-house, LLM, SBL internal

For these PoC tasks, we developed and applied specific guidelines for prompt creation, which is crucial for effectively using LLMs. Also, by using Brity Automation, we quickly established integrated processes, such as classifying and branching based on user intent (query, Q&A, summary, etc.), setting parameters for SOP queries, RAG requests, and applying result formats according to the type of answer. This allowed us to integrate LLM and RAG technologies into existing processes seamlessly.
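Branching on user intent, as described above, amounts to a classifier followed by a dispatch table. The sketch below uses a naive keyword classifier purely as a placeholder. How the PoC actually classifies intent is not stated, and the handler names and outputs are hypothetical.

```python
def classify_intent(message):
    """Naive keyword classifier; a placeholder for whatever the real workflow uses."""
    text = message.lower()
    if text.startswith(("summarize", "summary")):
        return "summary"
    if any(w in text for w in ("how many", "list", "count")):
        return "query"
    return "qa"

# Each intent maps to one branch of the workflow (handlers are stubs here).
HANDLERS = {
    "summary": lambda m: f"[summarize] {m}",
    "query": lambda m: f"[text-to-sql] {m}",
    "qa": lambda m: f"[rag] {m}",
}

def route(message, classifier=classify_intent):
    """Classify the message, then run the matching branch."""
    intent = classifier(message)
    return intent, HANDLERS[intent](message)

for msg in ("Summarize SOP-001",
            "How many deviations are open?",
            "What is the gowning procedure?"):
    print(route(msg))
```

Passing the classifier in as a parameter keeps the branching logic unchanged if the keyword rules are later replaced by an LLM-based classifier.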

In January 2024, we launched a pilot for 500 users in a dedicated environment with eight A100 GPUs. It currently handles an average of about 1,500 LLM requests per day in support of inspection and audit tasks. Going forward, we plan to expand the integration with internal systems company-wide by improving the UI/UX and HR.

The Benefits of Generative AI

Judging from demos on platforms like YouTube, it may seem that generative AI can handle any task effortlessly. In practice, it was not easy to extract and deliver internal data accurately while complying with the company's strict security protocols, or to let non-experts query the database in natural language to get the information they need.

The SOP Q&A and summarization functions using generative AI have been applied to some SOPs, but there are many more SOPs, each ranging from dozens to hundreds of pages. Being able to find information within these SOP documents is highly valuable. In a situation where the number of operational personnel is significantly increasing, using this technology to train new staff allows for quicker onboarding and helps them learn accurate procedures, reducing the frequency of process violations and improving quality. We are also streamlining and standardizing procedures by comparing and analyzing redundant expressions and terms. By using quality DB data that records process issues, changes, actions, etc., even non-IT experts can easily and quickly access the DB data, reducing the time required to search quality records during regulatory inspections and improving workflows by analyzing the root causes of process issues. We have also achieved customer satisfaction and trust by providing logical and accurate data.

Lessons Learned

One key takeaway from the project is that the active involvement from all business teams is mandatory. Without their contribution in generating data, it's difficult for any project to succeed. While most attention is focused on generative AI technology, increasing internal data due to productivity gains can lead to a rise in non-standardized documents. For companies to effectively connect accurate E2E data, it's crucial first to structure and standardize the data, and RAG or prompt writing should reflect this standardized data structure or format. Such company data should be continuously analyzed to establish an ongoing standardization cycle.

In an environment where new technologies constantly emerge and changes happen rapidly, it's essential to have a generative AI technology partner that can connect various LLMs as endpoints and provide a solution/platform for user interaction. It is essential to continually refine the requirements through ongoing discussions with partners and to validate whether the necessary use cases for the company can be implemented. In this process, selecting the appropriate LLM, GPU, and infrastructure tailored to the company is crucial, and accuracy can be improved through RAG development and prompt optimization that considers the company's unique characteristics. Sharing a vision of innovation and mutually understanding the improvements that the project will bring are key success factors, and above all, the interest from the executives, including the CEO, and sponsors is absolutely required.

Afterword

Going forward, Samsung Biologics plans to establish a virtuous cycle by generating standardized data, organizing it for analysis, and leveraging it as fine-tuning data for E2E business integration. Additionally, the company intends to expand the application of generative AI across various business areas, including sales, marketing, HR, and finance.

Elon Musk once said, "Progress occurs through continuous change and improvement," while Jeff Bezos advised people, "Focus on what doesn't change." Although these statements seem contradictory, they are compatible. From the perspective of IT implementation, change and innovation are constant, and it is crucial to focus quickly and keep pace with the flow of change. At the same time, companies can successfully internalize these technologies and create exemplary use cases by concentrating on what does not change, such as the data standardization described above, and applying generative AI on that foundation.

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator's permission is prohibited.

Strategic Marketing Team at Samsung SDS

The author was previously in charge of digital transformation for samsungsds.com and of solution page planning and operation, drawing on her experience in IT trend analysis, process innovation, and business strategy consulting. She is now in charge of content planning and marketing based on trend and solution analysis for each of SDS's main business sectors.